Enabling Chargeback in a Service Provider Cloud

I have blogged extensively about Hyper-V, VMM and Katal lately, and this post is part of that loosely structured series. Today I will focus on chargeback.

Prior to configuring Katal, we must have the basic components in place:

· Windows Server 2012
· Hyper-V
· System Center Virtual Machine Manager 2012 SP1
· System Center Orchestrator 2012 SP1
· SPF (Service Provider Foundation – a part of Orchestrator in SP1)

As part of the SP1 release, Microsoft introduced a framework for Chargeback.

The direct link is as follows:

SCVMM → SCOM → SCSM

1. You must have your private clouds configured in Virtual Machine Manager. This is key to getting Katal up and running.

2. You must integrate SCOM and SCVMM so that the configuration items and objects are discovered and monitored by SCOM. This is important since SCOM provides the reports for the chargeback solution. Importing the Chargeback solution is covered later in this blog post.

3. SCSM gets the configuration items from both SCOM and SCVMM and lets you create price sheets that you can associate with your clouds.

Now, the third step mentioned here is not strictly required for a working chargeback solution. However, Service Manager gives you some additional features, as well as extended and more dynamic reporting capabilities. This blog post will not include Service Manager; it is limited to SCVMM and SCOM and what you can get out of those two.

Step 1: Configuring the Fabric in VMM

The links above contain relevant information and guides on how to configure the Fabric resources with all the new capabilities in Windows Server 2012.

Step 2: Create a Private Cloud in VMM

Follow these steps to create a private cloud in Virtual Machine Manager 2012 SP1.

In ‘VMs and Services’, click ‘Create Cloud’ from the ribbon. This will launch the cloud wizard.

Assign a name and, optionally, a description for your cloud.

Select which resources should be available, at the host group level. If you have added a vCenter and VMware infrastructure to VMM, you can also add a VMware resource pool.

Select logical networks. Given the abstraction in the new VMM networking model, it’s important to select a logical network that is associated with a VM network. The tenants will access the VM networks in the portal, so you must create them in any case. A VM network can either be isolated (with network virtualization) or not isolated, in which case it uses the logical network directly.

Select Load Balancers. Currently, Katal does not support deploying Services, only virtual machines.

Select VIP templates. VIP templates are of no use if you’re not dealing with services. If this cloud should also be accessed through App Controller, both load balancers and VIP templates will be relevant, since App Controller supports services.

Port Classifications. The classifications worth making available in a cloud are the ones associated with VM networks. Choose all that apply.

Storage. Specify which storage classifications should be available in this private cloud.

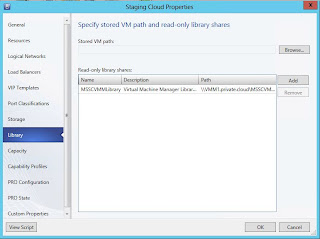

Library. Choose a read-only library and, optionally, a library where the tenants can store their virtual machines. For Katal, this may be too much to expose, and you can instead use only the templates you make available to Katal afterwards, if that is appropriate.

Capacity. Configure the elasticity of this private cloud. If you don’t specify any values here, you can scope the quota in Katal afterwards.

Click finish once you are done.
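To illustrate how the Capacity step in the wizard relates to the quota you can set in Katal later, here is a minimal Python sketch. It assumes that both limits apply at the same time, so the tighter one wins, and that a value left unset means "no limit". That is my simplification for illustration, not a description of how VMM or Katal enforce limits internally, and the dimension names (vms, cpus, memory_gb) are hypothetical.

```python
from typing import Dict, Optional

# None means "no limit configured" at that level (assumption for this sketch).
Limit = Optional[int]


def effective_limit(cloud_capacity: Limit, plan_quota: Limit) -> Limit:
    """Return the tighter of two limits, treating None as unlimited."""
    configured = [v for v in (cloud_capacity, plan_quota) if v is not None]
    return min(configured) if configured else None


def effective_limits(cloud: Dict[str, Limit], plan: Dict[str, Limit]) -> Dict[str, Limit]:
    """Combine per-dimension limits from the cloud and the plan."""
    dimensions = sorted(set(cloud) | set(plan))
    return {d: effective_limit(cloud.get(d), plan.get(d)) for d in dimensions}
```

For example, effective_limits({"vms": None, "memory_gb": 64}, {"vms": 5, "memory_gb": 128}) gives a limit of 5 VMs (from the plan) and 64 GB of memory (from the cloud).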

Step 3: Create Plans in Katal for the tenants

Log on to your Service Management portal to create Plans.

To access the Service Management portal, you must use the port you specified during installation. The default is 30091, and the tenant portal uses 30081.
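If the portal does not load, a quick way to rule out network or firewall problems is to test whether the two ports answer at all. The Python sketch below only attempts a TCP connection; it does not log on or verify that the portal itself is healthy. The port numbers are the defaults mentioned above, and any host name you pass in (for example katal.example.local) is a hypothetical placeholder for your own server.

```python
import socket
from typing import Dict

# Default ports from the installation; change these if you chose other ports.
ADMIN_PORTAL_PORT = 30091
TENANT_PORTAL_PORT = 30081


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


def check_portals(host: str) -> Dict[str, bool]:
    """Check the admin and tenant portal ports on the given host."""
    return {
        "admin": port_is_open(host, ADMIN_PORTAL_PORT),
        "tenant": port_is_open(host, TENANT_PORTAL_PORT),
    }
```

Calling check_portals with your portal server's name returns a small dictionary showing which of the two ports accepted a connection.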

Navigate to ‘Plans’ in the Service Management portal and click ‘New’, and then ‘Create’.

Select a friendly name for your plan and click next.

Select services for a hosting plan. In my case, I only want to provide a virtual machine cloud.

Once you’re satisfied, click finish.

Back in the portal, we can see that we have a new plan, but there’s still some configuration to do.

Before tenants can subscribe to the plan, we need to make it public. Click on the plan to configure it.

You will see ‘plan services’. Click on ‘Virtual Machine Clouds’ to configure the plan.

Configure the plan to connect to your ‘Cloud Provider’, which is the SPF server. In my case, I’m running Orchestrator and SPF on a single machine.

Configure the Virtual Machine Cloud. The cloud I created in VMM will be visible here, and I can use it together with Katal.

If you scroll down further, you must also specify the quota, templates, hardware profiles, networks and actions that should be available for the tenants.

Once you’re done, click save, and then click ‘Make public’.

Navigate back to ‘Plans’ in the Service Management portal and verify that the newly created plan is Public.

I have now created a plan in Katal that exposes my cloud in VMM, ready for tenants to subscribe and create virtual networks and virtual machines.

Note that there are several other options during these steps, such as advertising, invitation codes and different control mechanisms, that I won’t cover in this blog post, but they are worth a closer look for real-world deployments.

Step 4: Integrate SCOM and SCVMM

Once you have the prerequisites in place for SCOM and SCVMM integration (IIS, Windows Server and SQL MPs, SCOM console installed on the SCVMM server), you can set up the integration within the SCVMM console.

Navigate to ‘Settings’ in the SCVMM console.

Click on ‘System Center Settings’ and launch the ‘Operations Manager Server’ wizard.

The first page will tell you what you need to have in place prior to running this configuration.

Specify the server name of your SCOM server, and the credentials to access the management group. I have given my SCVMM service account the required permission in my lab environment.

Choose whether to enable PRO and maintenance mode integration between SCVMM and SCOM. I recommend enabling both to get the most value from this integration. Click next once you’re done.

Configure the connection from SCOM to SCVMM. I use the same credentials here, since SCOM will use my SCVMM service account when connecting to the SCVMM server.

Click next and finish, and SCOM will import the SCVMM MPs from SCVMM during this process.

To verify the integration afterwards, review the log in SCVMM, check the Operations Manager connection, and confirm in the monitoring pane in SCOM that the MP is showing data from SCVMM.

Step 5: Install Chargeback report files on the Operations Manager management server

Log on to the Operations Manager management server.

In the Chargeback folder, copy the subfolder named Dependencies from the Service Manager management server to the Operations Manager management server.

On the Operations Manager management server, start Windows PowerShell and then navigate to the Dependencies folder. For example, type cd Dependencies.

If you have not already set the execution policy to RemoteSigned, then type the following command, and then press ENTER:

Set-ExecutionPolicy RemoteSigned

Type the following command, and then press ENTER to run the PowerShell script that imports the chargeback management packs and adds chargeback functionality to Operations Manager:

.\ImportToOM.ps1

After the script has completed running, type exit, and then press ENTER to close the Administrator: Windows PowerShell window.

Ensure that Operations Manager has discovered information from Virtual Machine Manager such as virtual network interface cards, virtual hard disks, clouds, and virtual machines.

Step 6: Viewing Chargeback reports in Operations Manager

Navigate to the Reporting pane in the Operations Manager console.

Find the System Center 2012 Virtual Machine Manager reports and launch the ‘Chargeback’ report.

Before running the report, you must select the data to include. Choose between hosts, services, VMs, clouds and so on, and specify the date. To get an overview of the costs associated with these resources, you can specify the cost for memory, CPU, VM and storage classification.

Run the report once you are ready.
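To make the arithmetic behind those cost fields concrete, here is a minimal Python sketch. It assumes a simple linear model: a flat price per VM plus per-CPU, per-GB memory and per-GB storage rates (by storage classification), all charged per day. That model is my assumption for illustration and not necessarily how the Chargeback report computes its totals. All class names, VM names and rates are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, Iterable


@dataclass
class Rates:
    """Daily prices (hypothetical), mirroring the cost fields in the report."""
    per_vm: Decimal
    per_cpu: Decimal
    per_gb_memory: Decimal
    per_gb_storage: Dict[str, Decimal]  # storage classification -> price per GB


@dataclass
class VmUsage:
    """Resources assigned to one virtual machine."""
    name: str
    cpu_count: int
    memory_gb: int
    storage_gb: Dict[str, int]  # storage classification -> GB used


def daily_cost(vm: VmUsage, rates: Rates) -> Decimal:
    """Cost of one VM for one day under the assumed linear model."""
    storage = sum(
        (rates.per_gb_storage[cls] * gb for cls, gb in vm.storage_gb.items()),
        Decimal("0"),
    )
    return (
        rates.per_vm
        + rates.per_cpu * vm.cpu_count
        + rates.per_gb_memory * vm.memory_gb
        + storage
    )


def period_cost(vms: Iterable[VmUsage], rates: Rates, days: int) -> Decimal:
    """Total cost of a set of VMs (for example one cloud) over a number of days."""
    return sum((daily_cost(vm, rates) for vm in vms), Decimal("0")) * days
```

With a rate of 1.00 per VM, 0.50 per CPU, 0.25 per GB of memory and 0.10 per GB of ‘Gold’ storage, a VM with 2 CPUs, 4 GB of memory and 100 GB of Gold storage costs 1 + 1 + 1 + 10 = 13 per day in this model.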

Summary

Hopefully this blog post showed how you can build a chargeback solution with System Center and Katal. Once tenants start subscribing to a plan that in turn is connected to a cloud in VMM, you can easily run reports against that cloud to get an overview of how many resources they are consuming.