Earlier this year, I started to go deep into DSC to learn more about the concept, the possibilities and, most importantly, how we can improve what we already have and know by using this new approach to modeling.

Desired State Configuration is very interesting indeed, and to fully embrace it you need to be comfortable with PowerShell. Having said that, Desired State Configuration can give you some of what you require today, but not everything.

Let me spend a few minutes explaining what I am actually saying here.

If you want to use DSC as your primary engine, the standard solution to configure and deploy applications and services across clouds throughout the life cycle, there is nothing there to stop you from doing so.

However, given that in many situations you won’t be the individual who is ordering the application, server and dependencies, it is important that we can make this available in a world full of tenants with a demand for self-service.

Looking back at how we used to handle the life-cycle management of applications and infrastructure, I think it is fair to say it was something like this (in the context of System Center):

1) We deployed a Virtual Machine based on a VM Template using SCVMM. We then either:

a) Manually installed and configured applications and services within the guest after the VM deployment

b) Used SCCM to install agents, letting the admin interact with the OS to install and configure applications using a central management solution

2) If we wanted to provide monitoring, we then used SCOM to roll out the agents to our servers and configured them to report to their management group

3) Last but not least, we also wanted to be secure and have a reliable set of data. That’s why we also added backup agents to our servers using SCDPM

In total, we are talking about 4 agents here (SCVMM, SCCM, SCOM and SCDPM).

That is a lot.

Also note that I didn’t specify any version of System Center, so this was probably even before we started to talk about Private Clouds (introduced with System Center 2012).

And that’s the next topic: all of this in the context of cloud computing.

If we take a walk down memory lane, we can see some of Microsoft’s least proud moments: all the attempts to bring a fully functional self-service portal to the private cloud.

- We’ve had several self-service portals for VMM that were later replaced by different solutions, such as Cloud Service Process Pack and App Controller

- Cloud Service Process Pack, which was introduced with SC 2012, where all the components were merged into a single license, giving you out-of-the-box functionality related to IaaS. The solution was one of the worst we have seen, and the complexity of implementing it was beyond anything we have seen since.

- App Controller was based on Silverlight and gave us the “single pane of glass” vision for cloud management. With a connector to Azure subscriptions (IaaS) and to private and service provider clouds (using SPF), you could deploy and control your services and virtual machines using this console

The concept of service templates was introduced in System Center 2012 – Virtual Machine Manager, and if we go back to my list of actions we needed to perform, we could say that service templates would at some point replace the need for SCCM.

Service templates were an extension of the VM template. They gave us the possibility to design, configure and deploy multi-tier applications to our private clouds.

However, I have to admit that back then we did not see much adoption of service templates. Actually, we did not see any serious adoption before Microsoft started to push some pre-configured service templates of their own, and that happened last year, at the same time as their Gallery Items for Azure Pack were released.

To summarize, the service template concept (which was based on XML) gave the application owners and the fabric administrators a chance to work together to standardize and deploy complex applications into the private clouds, using App Controller. Note the two technologies that appear in that sentence: App Controller (Silverlight) and XML.

If we quickly turn to our “final destination”, Microsoft Azure, we can see that those technologies aren’t the big bet under any circumstances.

VM Roles are replacing service templates in the private cloud through Windows Azure Pack.

A VM Role is based on JSON and defines a virtual machine resource that tenants can instantiate and scale according to their requirements.

We have, in essence, two JSON files: one for the resource definition (RESDEF) and one for the resource extension (RESEXT).

The resource definition describes the virtual machine hardware and instantiation restrictions, while the resource extension definition describes how a resource should be provisioned.

In order to support user input in a user-friendly way, we also have a third JSON file, the view definition (VIEWDEF), which gives Azure Pack the details about how to let the user customize the creation of a VM Role.

These files are contained in a package, along with other files (custom resources, logos, etc.) that describe the entire VM Role.
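
To make the relationship between the three files a bit more concrete, here is a minimal Python sketch that bundles a resource definition, a resource extension and a view definition into one package-like structure. Please note that the key names inside each document (`vm_size`, `max_instances`, `provisioning_steps`, `wizard_fields`) and the `build_package` helper are purely hypothetical and do not reflect the real VM Role schema or packaging format; the only things taken from the text are the three parts and what each of them is responsible for.

```python
import json

# Hypothetical, heavily simplified stand-ins for the three JSON documents.
# The key names are illustrative only and are NOT the real VM Role schema.
resdef = {"name": "DemoRole", "vm_size": "Medium", "max_instances": 3}      # hardware and instantiation restrictions
resext = {"name": "DemoRoleExtension", "provisioning_steps": ["install-app"]}  # how the resource is provisioned
viewdef = {"wizard_fields": [{"param": "vm_size", "label": "VM size"}]}     # how the portal asks for input

# The three parts described in the text.
EXPECTED_PARTS = ("RESDEF", "RESEXT", "VIEWDEF")


def build_package(parts):
    """Serialize each part to JSON and refuse to build an incomplete package."""
    missing = [name for name in EXPECTED_PARTS if name not in parts]
    if missing:
        raise ValueError("missing parts: " + ", ".join(missing))
    return {name: json.dumps(parts[name], indent=2) for name in EXPECTED_PARTS}


package = build_package({"RESDEF": resdef, "RESEXT": resext, "VIEWDEF": viewdef})
print(sorted(package))
```

The point of the sketch is simply that each file has its own responsibility, and the VM Role only makes sense when all of them travel together.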

You might ask yourself why I am introducing you to something you already know very well, or why I am starting to endorse JSON. The answer lies in the clouds.

If you have ever played around with the Azure preview portal, you have had access to the Azure Resource Manager.

ARM introduced an entirely new way of thinking about your resources. Instead of creating and managing individual resources, you define a resource model of your service, creating a resource group with different resources that are logically managed together throughout the entire life cycle.

And guess what?

The Azure Resource Manager templates are based on JSON, which describes the resources and associated deployment parameters.
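
As a rough illustration of "resources plus deployment parameters", the Python sketch below models a very small template-like structure and resolves the parameters into a concrete set of resources that belong together. This is a toy and not a deployable template: it only keeps a `parameters` and a `resources` section, the resolver handles nothing but a plain `[parameters('name')]` reference, and the `resolve` function, the parameter names and the values are my own assumptions for the example.

```python
import re

# Simplified, template-like structure. Only "parameters" and "resources" are
# kept, echoing the two things the text says a template describes.
template = {
    "parameters": {
        "vmName": {"type": "string"},
        "storageName": {"type": "string"},
    },
    "resources": [
        {"type": "Microsoft.Compute/virtualMachines", "name": "[parameters('vmName')]"},
        {"type": "Microsoft.Storage/storageAccounts", "name": "[parameters('storageName')]"},
    ],
}

# Matches a name that consists solely of one parameter reference.
PARAM_REF = re.compile(r"^\[parameters\('(\w+)'\)\]$")


def resolve(template, values):
    """Return the resources with parameter references replaced by values."""
    unknown = set(values) - set(template["parameters"])
    if unknown:
        raise KeyError("undeclared parameters: " + ", ".join(sorted(unknown)))
    resolved = []
    for resource in template["resources"]:
        match = PARAM_REF.match(resource["name"])
        name = values[match.group(1)] if match else resource["name"]
        resolved.append({"type": resource["type"], "name": name})
    return resolved


# Hypothetical input values; the result is one logical group of resources.
group = resolve(template, {"vmName": "web01", "storageName": "webstore01"})
print(group)
```

The same model can be resolved again with different values, which is what makes it a description of the service rather than a list of individually created resources.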

So to give you a short summary so far:

Service templates were great when they arrived with SCVMM 2012. However, being based on XML and relying on App Controller for self-service, they weren’t flexible enough, nor designed for the cloud.

Because of a huge focus on consistency as part of Microsoft’s Cloud OS vision, Windows Azure Pack was brought on-premises to help organizations adopt the cloud at a faster cadence. We then got VM Roles, which are meant to be more aligned with the public cloud (Microsoft Azure) than service templates are.

So we might (so far) conclude that VM Roles are here to stay, and if you are focusing too much on service templates today, you need to reconsider that investment.

The good, the bad and the ugly

So far, this blog post has described something similar to a journey. Nevertheless, we have not reached the final destination yet.

I promised you a blog post about DSC, SMA and VM Roles, but so far, you have only heard about the VM Roles.

Before we proceed, we need to be completely honest about the VM Roles to understand why some engineering is required here. To better explain what I am talking about, I am comparing a VM Role with a stand-alone VM based on a VM Template:

As you can see, the VM Role gives us much more than a stand-alone VM from a VM template. A VM Role is our preferred choice when we want to deploy applications in a similar way to a service template, but only as single tiers. We can also service the VM Role and scale it on demand.

A VM, on the other hand, lacks all these fancy features. A stand-alone VM can only be based on a VM Template, giving us a pre-defined HW template in VMM with some limited settings at the OS level.

However, please note that the VM supports what are probably the most important things for any production scenario: backup and DR.

That is correct. If you use backup and DR together with a VM Role, you will end up in a scenario where you have orphaned objects in Azure Pack. This will effectively break the relationship between the VM Role (CloudService in VMM) and its members. There is currently no way to recover from that scenario.

This got me thinking.

How can we leverage the best of both worlds? Could we use the VM Role as the engine that drives and creates the complexity here, supplemented by SMA and Desired State Configuration to perform the in-guest operations on VMs deployed from normal VM templates?

I ran through the scenario with a fellow MVP, Stanislav Zhelyazkov, and he nodded and agreed. “This seems to be the right thing to do moving forward, you have my blessing,” he said.

The workflow

This is where it all makes sense: combining the beauty of VM Roles, DSC and SMA to achieve the following scenario:

1) A tenant logs on to the tenant portal. The subscription includes the VM Cloud resource provider, where the cloud administrator has added one or more VM Roles.

2) The VM Role Gallery shows these VM Roles and provides the tenant with instructions on how to model and deploy the application.

3) The tenant provides some input in the VM Role wizard and the VM Role deployment starts.

4) In the background, a parent runbook (SMA) that is linked to the event in the portal kicks in and, based on the VM Role the tenant chose, invokes the correct child runbook.

5) The child runbook deploys the (stand-alone) VMs necessary for the application specified in the VM Role, joins them to the proper domain (if specified) and automatically adds them to the tenant subscription.

6) Once the stand-alone VMs are started, the VM Role resource extension kicks in (which is the DSC configuration, using push) and, based on the parameters and inputs from the tenant, deploys and models the application entirely.

7) Once the entire operation has completed, the child runbook cleans up the VM Role and removes it from the subscription.
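
The parent/child runbook hand-off in steps 4 to 7 can be sketched roughly as follows. This is plain Python used only to show the control flow; it is not SMA or PowerShell Workflow code, nothing is actually deployed, and every name in it (`Deployment`, `CHILD_RUNBOOKS`, `child_runbook_wap`, the `vm_count` and `domain` inputs, the `contoso.local` domain) is a hypothetical placeholder.

```python
from dataclasses import dataclass, field


@dataclass
class Deployment:
    """What the parent runbook hands to a child runbook (hypothetical shape)."""
    role_name: str
    tenant_inputs: dict
    log: list = field(default_factory=list)


def child_runbook_wap(dep):
    """Simulates steps 5 to 7 for one VM Role; nothing is really deployed."""
    count = dep.tenant_inputs.get("vm_count", 1)
    vms = [f"{dep.role_name}-vm{i}" for i in range(1, count + 1)]
    dep.log.append(f"deployed {len(vms)} stand-alone VMs")          # step 5
    domain = dep.tenant_inputs.get("domain")
    if domain:                                                      # only if specified
        dep.log.append(f"joined VMs to {domain}")
    dep.log.append("added VMs to tenant subscription")
    # Step 6 is carried out by the VM Role resource extension, not by the
    # runbook; it is recorded here only to show the ordering.
    dep.log.append("applied DSC configuration (push)")
    dep.log.append("removed VM Role from subscription")             # step 7
    return vms


# Step 4: the parent runbook picks the child based on the chosen VM Role.
CHILD_RUNBOOKS = {"WindowsAzurePack": child_runbook_wap}


def parent_runbook(role_name, tenant_inputs):
    child = CHILD_RUNBOOKS.get(role_name)
    if child is None:
        raise ValueError(f"no child runbook registered for VM Role {role_name!r}")
    dep = Deployment(role_name, tenant_inputs)
    return child(dep), dep.log


vms, log = parent_runbook("WindowsAzurePack", {"vm_count": 6, "domain": "contoso.local"})
print(vms)
print(log)
```

Keeping the parent as a simple lookup means that supporting a new VM Role is a matter of registering one more child runbook, without touching the part that is linked to the portal event.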

In a nutshell, we have achieved the following with this example:

1) We have successfully been able to deploy and model our applications using the extension available in VM Roles, where we are using Desired State Configuration to handle everything within the guests (instead of normal PowerShell scripts).

2) We are combining the process in WAP with SMA runbooks to handle everything outside of the VM Role and the VMs.

3) We are guaranteed a supported life-cycle management of our tenant workloads.

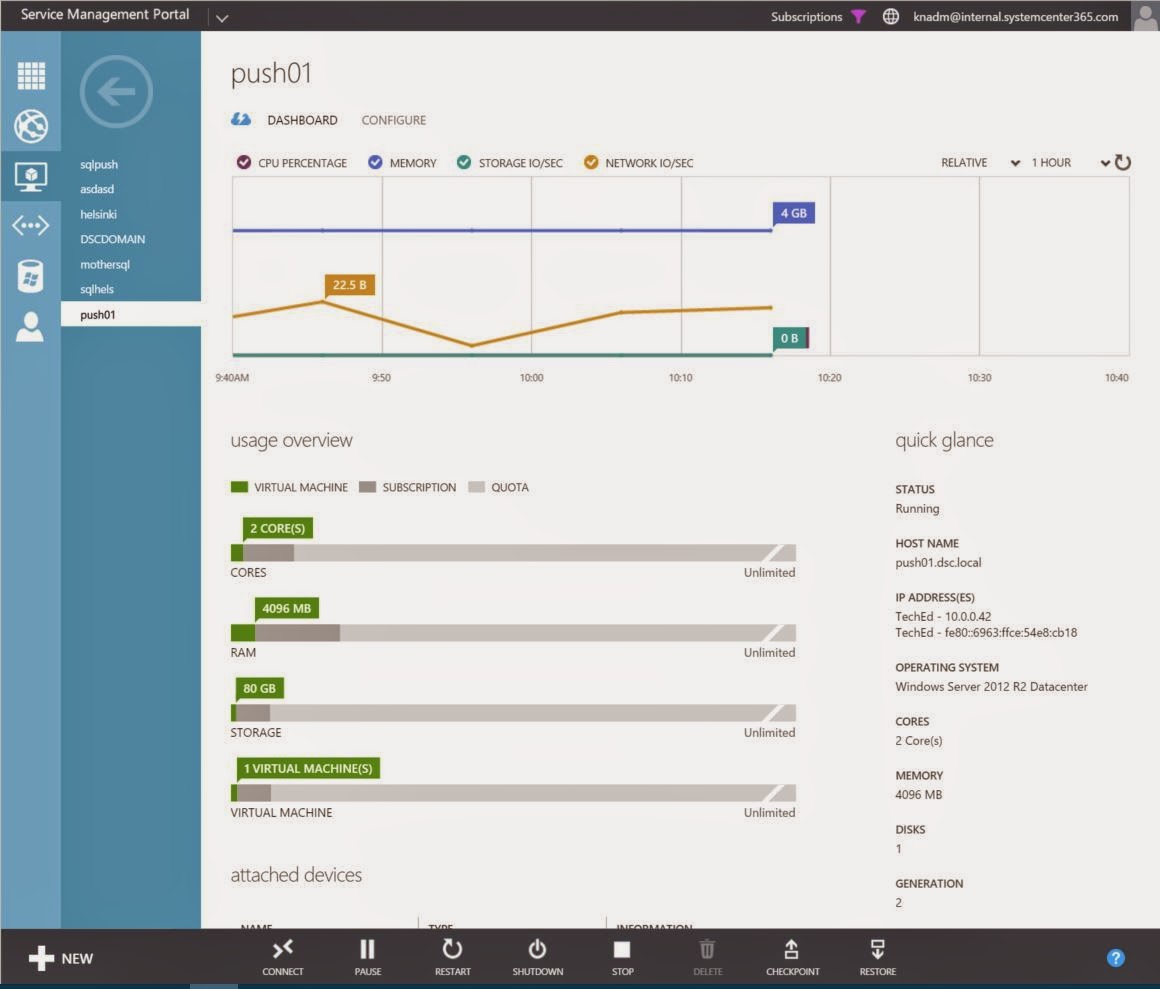

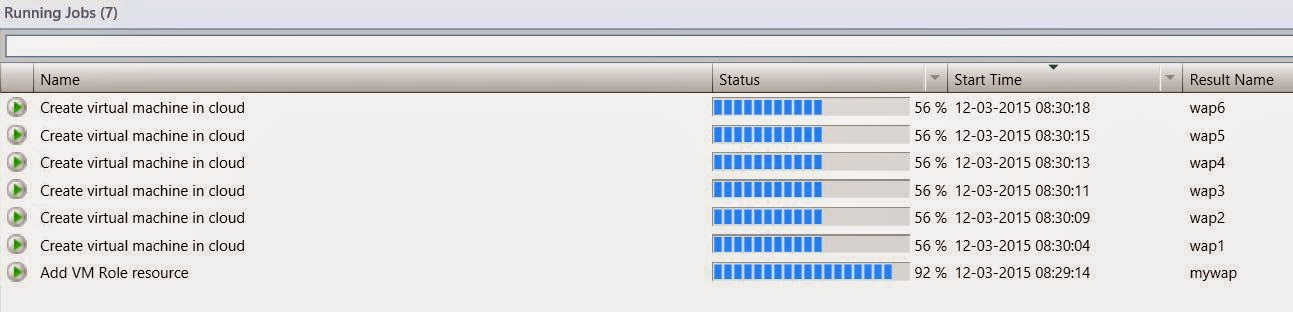

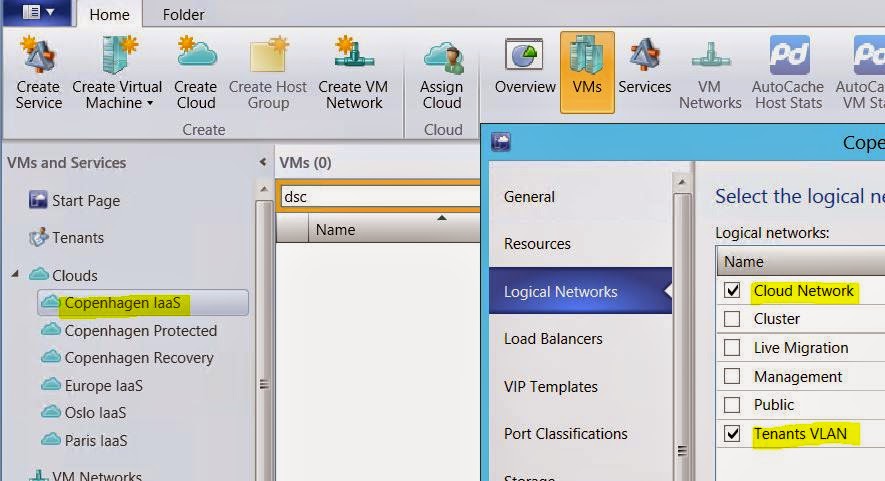

Here you can see some screenshots from a VM Role that will deploy Windows Azure Pack on 6 stand-alone VMs, combining DSC and SMA.

In an upcoming blog post, we will start to look at the actual code being used, the challenges and the workarounds.

I hope that this blog post showed you some interesting things about application modeling with VM Roles, SMA and DSC, and that the times are a-changing compared to what we used to do in this space.