Hyper-V Recovery Manager provided organizations using Hyper-V and System Center with automated protection and orchestrated, accurate recovery of virtualized workloads between private clouds, leveraging the asynchronous replication engine in Hyper-V: Hyper-V Replica.

In other words, no data was sent to Azure except the metadata from the VMM clouds.

This has now changed: the service has been renamed Microsoft Azure Site Recovery, and it finally lets you replicate between private clouds and the public cloud (Microsoft Azure).

This means that we can still use the automatic protection of workloads that we are familiar with through the service, but now we can use Azure as the target in addition to private clouds.

This is also a door opener for migration scenarios: organizations considering moving VMs to the cloud can easily do so with almost no downtime using Azure Site Recovery.

Topology

In our environment, we will use a dedicated Hyper-V cluster with Hyper-V Replica, which means we have added the Hyper-V Replica Broker role to the cluster. This cluster is located in its own host group in VMM, and that is the only host group we have added to a cloud called “E2A”. Microsoft Azure Site Recovery requires System Center Virtual Machine Manager, which is responsible for communicating and aggregating the instructions the administrator gives in the Azure portal.
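If you want to confirm that the Hyper-V Replica Broker role is actually present before continuing, a quick check along these lines can be run on one of the cluster nodes. This is only a minimal sketch: it assumes the FailoverClusters module is available and that the broker uses the built-in “Virtual Machine Replication Broker” resource type.

```powershell
# Sketch: confirm that the cluster has a Hyper-V Replica Broker role.
# Assumes it runs on a cluster node with the FailoverClusters module installed.
Import-Module FailoverClusters

# The broker is a cluster resource of type "Virtual Machine Replication Broker"
$broker = Get-ClusterResource |
    Where-Object { $_.ResourceType.Name -eq "Virtual Machine Replication Broker" }

if ($broker) {
    # Show the broker resource, the role (group) that owns it and its state
    $broker | Select-Object Name, OwnerGroup, State
} else {
    Write-Warning "No Hyper-V Replica Broker role found on this cluster."
}
```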

Pre-reqs

- You must have an Azure account and add Recovery Services to your subscription.
- Certificate (.cer) that you upload to the management portal and register to the vault. Each vault has a single .cer certificate associated with it, and it is used when registering VMM servers in the vault.
- Certificate (.pfx) that you import on each VMM server. When you install the Azure Site Recovery Provider on the VMM server, you must use this .pfx certificate.
- Azure Storage account, where the replicas replicated to Azure are stored. The storage account needs geo-replication enabled, should be in the same region as the Azure Site Recovery service, and should be associated with the same subscription.
- VMM cloud(s). A cloud must be created in VMM that contains Hyper-V hosts in a host group enabled with Hyper-V Replica.
- Azure Site Recovery Provider must be installed on the VMM management server(s). In our case, we had already implemented “Hyper-V Recovery Manager”, so we were able to do an in-place upgrade of the ASR Provider.
- Azure Recovery Services agent must be installed on every Hyper-V host that will replicate to Microsoft Azure. Make sure you install this agent on all hosts located in the host group that you are using in your VMM cloud.

Once we had all of this in place in our environment, we were ready to proceed to the configuration of our site recovery setup.

Configuration

Log in to the Azure management portal and navigate to Recovery Services to get the details of your vault and see the instructions on how to get started.

We will jump to “Configure cloud for protection”, as the fabric in VMM is already configured and ready to go.

The provider installed on the VMM management server exposes the details of our VMM clouds to Azure, so we can easily pick “E2A”, which is the dedicated cloud for this setup. This is where we will configure our site recovery to target Microsoft Azure.

Click on the cloud and configure protection settings.

On target, select Microsoft Azure. Also note that you can set up protection and recovery using another VMM cloud or VMM management server.

For the configuration part, we can specify some options when Azure is the target.

Target: Azure. We are now replicating from our private cloud to Microsoft Azure’s public cloud.

Storage Account: If none is present, you need to create a storage account before you can proceed. If you have several storage accounts, choose one that is in the same region as your recovery vault.

Encrypt stored data: This is set to “on” by default and cannot be changed in the preview.

Copy frequency: Since we are using Hyper-V 2012 R2 in our fabric, which introduced additional copy frequency options, we can select 30 seconds, 5 minutes or 15 minutes. We will use the default of 5 minutes in this setup.

Retain recovery points: Hyper-V Replica can create additional recovery points (crash-consistent snapshots) so that you have more flexible recovery options for your virtual workload. We don’t need any additional recovery points for our workloads, so we will leave this at 0.

Frequency of application consistent snapshots: If you want app-consistent snapshots (ideal for SQL servers, where VSS snapshots will be created), you can enable this and specify the frequency here.

Replication settings: This is set to “immediately”, which means that every time a new VM is deployed to our “E2A” cloud in VMM with protection enabled, it will automatically start the initial replication from on-premises to Microsoft Azure. For large deployments, we would normally recommend scheduling this.

Once you are happy with the configuration, you can click ‘save’.

Azure Site Recovery will now configure this for your VMM cloud. This means that, through the provider, the hosts/clusters will be configured with these settings automatically from Azure:

- Firewall rules used by Azure Site Recovery are configured so that ports for replication traffic are opened
- Certificates required for replication are installed
- Hyper-V Replica settings are configured

Cool!

You will have a job view in Azure that shows every step of the actions you perform. We can see that protection has been successfully enabled for our VMM cloud.

If we look at the cloud in VMM, we also see that protection is enabled and Microsoft Azure is the target.

Configuring resources

In Azure, you have been able to create virtual networks for some time now. We can of course use them in this context and map them to the VM networks present in VMM.

To ensure business continuity, it is important that the VMs that fail over to Azure can be reached over the network, and that RDP is enabled within the guest. We are mapping our management VM network to a corresponding network in Azure.

VM Deployment

Important things to note:

In preview, there are some requirements for using Site Recovery with your virtual machines in the private cloud.

Only Gen1 virtual machines are supported!

This means that the virtual machines must have their OS partition attached to an IDE controller. The disk can be VHD or VHDX, and you can even attach data disks that you want to replicate. Please note that Microsoft Azure does not support the VHDX format (introduced in Hyper-V 2012), but will convert the VHDX to VHD during the initial replication to Azure. In other words, virtual machines using VHDX on-premises will run on VHDs when you fail over to Azure. If you fail back to on-premises, VHDX will be used as expected.
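To see which of your existing virtual machines meet these requirements, you could list the generation, disk controller and disk format per VM directly on a Hyper-V host. The following is a rough sketch, assuming the Hyper-V PowerShell module on a Windows Server 2012 R2 host and that all attached disks are virtual hard disk files (`Get-VHD` will report an error for pass-through disks).

```powershell
# Sketch: list each VM's generation and where its disks are attached.
# Assumes the Hyper-V PowerShell module on a Windows Server 2012 R2 host.
Import-Module Hyper-V

Get-VM | ForEach-Object {
    $vm = $_
    Get-VMHardDiskDrive -VMName $vm.Name | ForEach-Object {
        [pscustomobject]@{
            VMName         = $vm.Name
            Generation     = $vm.Generation                      # must be 1 in the preview
            ControllerType = $_.ControllerType                   # OS disk must be on IDE
            DiskFormat     = (Get-VHD -Path $_.Path).VhdFormat   # VHD or VHDX
            Path           = $_.Path
        }
    }
}
```

Any VM reported with generation 2 falls outside what the preview supports, while a VHDX disk format is fine since the conversion to VHD happens on the Azure side.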

Next, we will deploy a new VM in VMM. When we enable protection on the hardware profile and want to deploy to a cloud, intelligent placement will kick in and find the appropriate cloud that contains Hyper-V hosts/clusters that meet the requirements for replica.

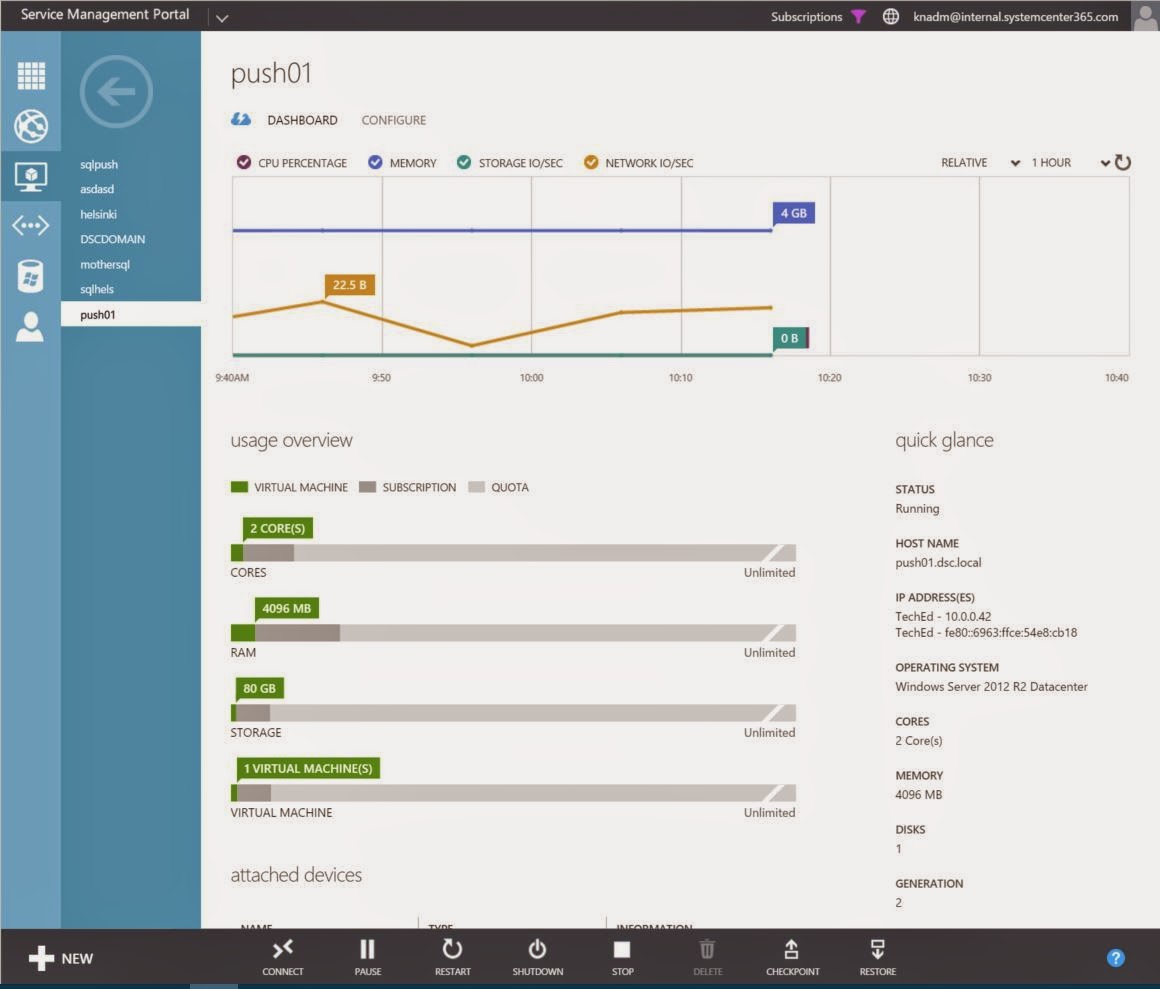

After the deployment, the virtual machine should immediately start an initial replication to Microsoft Azure, as we configured in the protection settings for our cloud in Azure. We can see the details of the job in the portal and monitor the process. Once it is done, we can see at a lower level, directly on the VM, that we are actually replicating to Microsoft Azure.

After a while (depending on available bandwidth), the initial replication to Azure has finished and the VM is protected.
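The same low-level view is available from PowerShell on the Hyper-V host that owns the VM. This is a small sketch, assuming the Hyper-V module is installed and using the VM name “Azure01” from this walkthrough. The exact values reported when Azure is the target are not shown here, so treat the selected properties as a starting point.

```powershell
# Sketch: inspect replication for one VM on the Hyper-V host that owns it.
Import-Module Hyper-V

$vmName = "Azure01"   # VM name used in this walkthrough

# Replication state, health and copy frequency (300 = 5 minutes)
Get-VMReplication -VMName $vmName |
    Select-Object VMName, State, Health, Mode, FrequencySec, PrimaryServer, ReplicaServer

# Replication statistics such as last replication time and average size
Measure-VMReplication -VMName $vmName
```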

Enabling protection on already existing VMs in the VMM cloud

Also note that you can enable this directly from Azure. If you have a virtual machine running in a VMM cloud that is enabled for protection, but the VM itself is not enabled in VMM, then Azure can pick this up and you can configure it directly from the portal.

If you prefer to do this in VMM, simply open the properties of the VM and enable it for protection.

One last option is to use the VMM PowerShell module to enable this on many VMs at once.

Set-SCVirtualMachine -VM (Get-SCVirtualMachine -Name "VMName") -DRProtectionRequired $true -RecoveryPointObjective 300
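To apply this to many VMs at once, the command can be wrapped in a loop over the VMs in the cloud. The following is a minimal sketch, assuming the VMM PowerShell module is installed and that every VM in the “E2A” cloud should be protected. Filter the list first if that is not the case.

```powershell
# Sketch: enable protection for every VM in the "E2A" cloud.
# Assumes the VMM console/PowerShell module is installed on this machine.
Import-Module virtualmachinemanager

$cloud = Get-SCCloud -Name "E2A"

Get-SCVirtualMachine -Cloud $cloud | ForEach-Object {
    # 300 seconds matches the 5-minute copy frequency chosen for the cloud
    Set-SCVirtualMachine -VM $_ -DRProtectionRequired $true -RecoveryPointObjective 300
}
```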

Test Failover

One of the best things about Hyper-V Replica is that complex workflows, such as test failovers, planned failovers and unplanned failovers, are integrated into the solution. These are also exposed in the Azure portal, so that you can easily perform a test failover on your workloads. Once a VM is protected, meaning that it has successfully completed the initial replication to Azure, we can perform a test failover. This will create a copy based on the recovery point you select and boot that virtual machine in Microsoft Azure.

Once you are satisfied with the test, you can complete the test failover from the portal.

This will power off the test virtual machine and delete it from Azure. Please note that this process will not interfere with the ongoing replication from the private cloud to Azure.

Planned failover

You can use planned failover in Azure Site Recovery for more than just failover. Consider a migration scenario where you actually want to move your existing on-premises workload to Azure: planned failover will be the preferred option. This will ensure minimal downtime during the process and start up the virtual machine in Azure afterwards.

In our case, we wanted to simulate planned maintenance in our private cloud, and therefore performed a planned failover to Azure.

Click on the virtual machine you want to fail over, and click planned failover in the portal.

Note that if you have not yet performed a test failover of the virtual machine, we recommend that you do so before an actual failover.

Since this is a test, we are ready to proceed with the planned failover.

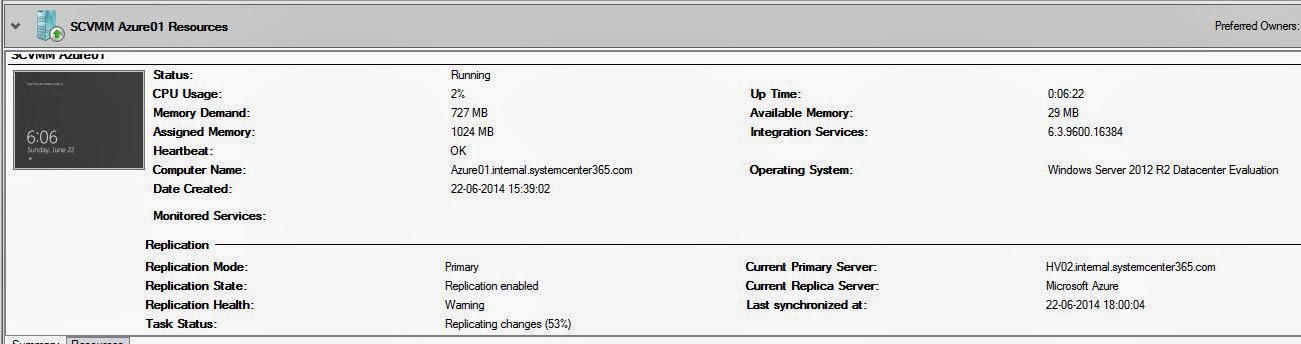

When the job has started, we drill down to the lowest level again, Hyper-V Replica, to see what is going on. We can see that the VM is preparing for planned failover with Azure as the target.

In the management portal, we can see the details of the planned failover job.

Once done, we have a running virtual machine in Microsoft Azure that appears in the Virtual Machine list.

If we go back to the protected clouds in Azure, we see that our virtual machine “Azure01” has “Microsoft Azure” as its active location.

If we click on the VM and drill into the details, we can see that we are able to change the name and the size of the virtual machine in Azure.

We have now successfully performed a planned failover from our private cloud to Microsoft Azure!

Failback from Microsoft Azure

When we were done with the planned maintenance in our fabric, it was time to fail back the virtual machine running in Azure to our VMM cloud.

Click on the protected virtual machine that is running in Azure, and click planned failover.

We have two options for data synchronization. The first, “Synchronize data before failover”, performs something similar to “re-initializing replication” to our private cloud. Synchronization is performed without shutting down the virtual machine, leading to minimal downtime during the process.

The other option, “Synchronize data during failover only”, minimizes the amount of synchronized data but causes more downtime, as the shutdown begins immediately. Synchronization starts after the shutdown to complete the failover.

We are aiming for minimal downtime, so option 1 is preferred.

When the job has started, you can monitor the process in the Azure portal.

Once the sync is complete, we must complete the failover from the portal so that the VM is started in our private cloud.

Checking Hyper-V Replica again, we can see that the state is set to “failback in progress” and that we currently have no primary server.

The job has now completed all the required steps in Azure.

Moving back to Hyper-V Replica, we can see that the VM is again replicating to Microsoft Azure, and that the primary server is one of our Hyper-V nodes.

In VMM, our virtual machine “Azure01” is running again in the “E2A” cloud.

In the Azure management portal, our VM is still present in the virtual machines list, but stopped.

Thanks for joining us on this guided tour of how to work with Azure Site Recovery.

Next time we will explore the scenarios we can achieve by using recovery plans in Azure Site Recovery to streamline failover of multi-tier applications, LOB applications and much more.