This is the first blog post I am writing this year.

I was planning to publish this before Christmas, but I figured it would be better to wait and reflect even more on the trends that are currently taking place in this industry.

So what better way to start the New Year than with something I really think will be one of the big bets for the coming year(s)?

I drink a lot of coffee. In fact, I suspect it will kill me someday. On a positive note, at least I will have been the one in control. Jokes aside, I like to drink coffee when I’m thinking out loud about technologies and reflecting on the steps we’ve taken so far.

Going back to 2009-10, when I was entering the world of virtualization with Windows Server 2008 R2 and Hyper-V, I couldn’t possibly imagine how things would change.

Today, I realize that the things we were doing back then were just the foundation for what we are seeing now.

The same arguments are being used throughout the different layers of the stack.

We need to optimize our resources, increase density and flexibility, and provide fault-tolerant, resilient and highly available solutions to move our business forward.

That was the approach back then, and it is still the approach right now.

We have constantly focused on the infrastructure layer, trying to solve whatever issues might occur. We have believed that if we put our effort into the infrastructure layer, the applications we put on top of it will be smiling from ear to ear.

But things change.

The infrastructure is changing, and so are the applications.

Azure made its debut in 2007-08, as I remember it. Back then it was all about Platform as a Service (PaaS) offerings.

The offerings were a bit limited back then, giving us cloud services (web roles and worker roles), caching and messaging systems such as Service Bus, together with SQL and other storage options such as blob, table and queue.

Many organizations were really struggling back then to get a good grasp of this approach. It was complex. It was a new way of developing and delivering services, and in almost all cases, the application had to be rewritten to be fully functional using the PaaS components in Azure.

People were just getting used to virtual machines and had started to use them frequently, also as part of testing and developing new applications. Many customers went deep into virtualization in production as well, and the result was strong customer demand for the ability to host virtual machines in Azure too.

This would simplify any migration of “legacy” applications to the cloud, and more or less solve the well-known challenges we were aware of back then.

During the summer of 2011 (if memory serves), Microsoft announced support for Infrastructure as a Service (IaaS) in Azure. Finally they hit the high note!

Now what?

Increased consumption of Azure was the natural result, and the cloud came a bit closer to most customers out there. Finally there was a service model that people could really understand. They were used to virtual machines; the only difference was the runtime environment, which was now hosted in Azure datacenters instead of their own. At the same time, the PaaS offerings in Azure had evolved and grown even more sophisticated.

It is common knowledge now, and it was common knowledge back then, that PaaS is a better service model than IaaS for applications living in the cloud.

At the end of the day, every developer and business around the globe would prefer to host and deliver their applications to customers as SaaS rather than anything else, such as traditional client/server applications.

So where are we now?

You might be wondering where the heck I am going with this.

And trust me, I wondered too at some point. I had to get another cup of coffee before I could break it down further.

Looking at Microsoft Azure and the services we have there, it is clear to me that the ideal goal for the IaaS platform is to get as close as possible to the PaaS components with regard to scalability, flexibility, automation, resiliency, self-healing and much more.

Those who have gone deep into Azure with Azure Resource Manager know that there are huge opportunities now to leverage the platform itself to deliver IaaS that you ideally don’t have to touch.

With features such as VM Scale Sets (preview), Azure Container Service (also preview), and a growing list of extensions to use together with your compute resources, you can potentially instantiate a state-of-the-art infrastructure hosted in Azure without having to touch the infrastructure. (Of course you can’t touch the Azure infrastructure; I am talking about the virtual infrastructure itself, the part you are basically responsible for.)

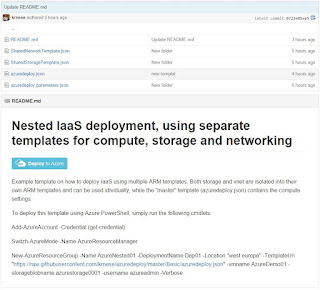

The IaaS building blocks in Azure are separated so that you can look at them as individual scale units. Compute, Storage and Networking are combined to bring you virtual machines. Because these building blocks are loosely coupled, we can also see that they power many of the PaaS components in Azure itself, which live on top of IaaS.

The following graphic shows how the architecture is layered.

Once Microsoft Azure Stack becomes available on-prem, we will have one consistent platform that brings to your own datacenter the same capabilities you can already use in Azure.

Starting at the bottom, IaaS is on the left-hand side while PaaS is on the right-hand side.

Climbing up, you can see that Azure Stack and the Azure public cloud, which will be consistent, share the same approach. VMs and VM Scale Sets cover both IaaS and PaaS, but VM Scale Sets are placed further to the right than VMs. This is because VM Scale Sets are considered the backbone powering the other PaaS services on top of them.

VM Extensions also lean more to the right, as they give us the opportunity to do more than traditional IaaS. Using extensions, we can extend our virtual machines to perform advanced in-guest operations, so anything from provisioning complex applications to configuration management can be handled automatically by the Azure platform.

On the left-hand side, on top of VM Extensions, we find cluster orchestration such as Scalr, RightScale, Mesos and Swarm. These still deal with a lot of infrastructure, but also provide orchestration on top of it.

Batch is a compute job scheduling service powered by Azure compute. It starts a pool of virtual machines for you, installs applications and stages data, and runs jobs with as many tasks as you have.
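
To make the pool/job/task idea a bit more concrete, here is a small local simulation. It is a conceptual sketch only, assuming a thread pool can stand in for the pool of virtual machines; `Node` and `run_job` are hypothetical names, and none of this is the Azure Batch SDK or its API.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

# Hypothetical local model of a Batch-style pool; not the Azure Batch SDK.


@dataclass
class Node:
    """Stand-in for one virtual machine in the pool."""
    name: str
    staged: Dict[str, Any] = field(default_factory=dict)

    def prepare(self, shared_data: Dict[str, Any]) -> None:
        # Stand-in for "install applications and stage data" on the node.
        self.staged = dict(shared_data)


def run_job(
    tasks: List[Callable[[Dict[str, Any]], Any]],
    pool_size: int,
    shared_data: Dict[str, Any],
) -> List[Any]:
    """Start a pool, prepare every node, then run all tasks of the job."""
    nodes = [Node(f"node-{i}") for i in range(pool_size)]
    for node in nodes:
        node.prepare(shared_data)

    def run(indexed_task):
        index, task = indexed_task
        node = nodes[index % pool_size]  # round-robin placement of tasks on nodes
        return task(node.staged)

    with ThreadPoolExecutor(max_workers=pool_size) as executor:
        # map() returns results in the same order the tasks were submitted
        return list(executor.map(run, enumerate(tasks)))


if __name__ == "__main__":
    job = [lambda data, n=n: data["factor"] * n for n in range(5)]
    print(run_job(job, pool_size=2, shared_data={"factor": 10}))  # [0, 10, 20, 30, 40]
```

Each node is prepared once, before any task runs, and the job's results come back in task order regardless of which node ran which task.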

Going further to the right, we see two very interesting things, which are also the main driver for this entire blog post. Containers and Service Fabric lean more to the PaaS side, and it is no coincidence that Service Fabric sits to the right of containers.

Let us try to break down containers and Service Fabric.

Comparing Containers and Service Fabric

Right now in Azure, we have a new preview service that I encourage everyone who is interested in container technology to look into. The Azure Container Service (ACS) resource provider basically gives you a very efficient, low-cost way to instantiate a complete container environment using a single Azure Resource Manager API call to the underlying resource provider. After the deployment completes, you may be surprised to find 23 resources within a single resource group, containing all the components you need to get a complete container environment up and running.

One important thing to note at this point is that ACS is Linux-first and containers-first, whereas Service Fabric is Windows-first and microservices-first rather than containers-first.

At this point it is OK to be confused. Perhaps this is a good time for me to explain the difficulty of putting this on paper.

I am now on my third cup of coffee.

Azure explains it all

Let us take a few steps back to get some more context for the discussion we are entering.

Keeping up with everything that comes to Azure nowadays is more or less a full-time job. The pace of innovation, releases and new features is close to crazy.

Have you ever wondered how the engineering teams are able to ship solutions this fast, and with this level of quality?

Many of the services we use in Azure today are actually running on Service Fabric as microservices. This is a new way of doing development, and also a true implementation of DevOps, both as a culture and from a tooling point of view.

Meeting customer expectations isn’t easy. But it is possible when you have a platform that supports and enables it.

As I stated earlier in this blog post, the end goal for any developer would be to deliver their solutions using the SaaS service model.

That is the desired model, and it implies continuous delivery, automation through DevOps, and adoption of automatable, elastic and scalable microservices.

Wait a moment. What exactly is Service Fabric?

Service Fabric provides complete runtime management for microservices and deals with the things we have been fighting for decades. Out of the box, we get hyper-scale, partitioning, rolling upgrades, rollbacks, health monitoring, load balancing, failover and replication. All of these capabilities are built in, so we can focus on building our applications as scalable, reliable, consistent and available microservices.
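
The rolling upgrade and rollback behavior can be illustrated with a simplified model. This sketch is loosely based on the idea of upgrading one group of nodes (an upgrade domain) at a time and rolling back if a health check fails. `rolling_upgrade` and the `is_healthy` probe are hypothetical, and the real Service Fabric upgrade process has far more configuration than this.

```python
from typing import Callable, Dict, List

# Hypothetical model of a health-checked rolling upgrade; not the Service Fabric API.


def rolling_upgrade(
    domains: List[List[str]],
    versions: Dict[str, str],
    new_version: str,
    is_healthy: Callable[[str, str], bool],
) -> bool:
    """Upgrade one domain at a time and roll back everything on a failed health check.

    domains:    groups of node names that are upgraded together
    versions:   current version per node, updated in place
    is_healthy: health probe, called as is_healthy(node, version)
    Returns True if all domains were upgraded, False if the upgrade was rolled back.
    """
    previous = dict(versions)  # snapshot taken before touching any node
    for domain in domains:
        for node in domain:
            versions[node] = new_version
        if not all(is_healthy(node, new_version) for node in domain):
            # Restore every node, including domains that were already upgraded.
            versions.clear()
            versions.update(previous)
            return False
    return True


if __name__ == "__main__":
    versions = {"n1": "1.0", "n2": "1.0", "n3": "1.0"}
    ok = rolling_upgrade([["n1"], ["n2"], ["n3"]], versions, "2.0",
                         lambda node, version: node != "n3")
    print(ok, versions)  # False {'n1': '1.0', 'n2': '1.0', 'n3': '1.0'}
```

Upgrading domain by domain means only part of the cluster runs untested code at any moment, and the snapshot makes the rollback a single, all-or-nothing step.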

Service Fabric provides a model that lets you bundle the code for a collection of related microservices, together with their configuration manifests, into an application package. The package is then deployed to a Service Fabric cluster (a cluster that can run on anything from one to many thousands of Windows virtual machines; yes, hyper-scale). There are two defined programming models in Service Fabric: ‘Reliable Actors’ and ‘Reliable Services’. Both models make it possible to write stateless as well as stateful applications. This is breaking news.

You can go ahead and develop stateless applications in more or less the same way you have for years, externalizing the state to a queuing system or some other data store, but then you again have to handle the complexity of running a distributed application at scale. Personally, I think the stateful approach is what makes Service Fabric so exciting. Being able to write stateful applications that are constantly available, with a primary/replica relationship among their members, is very tempting. We trust Service Fabric itself to deal with all the complexity we have been trying to handle in the infrastructure layer for years, while the stateful microservices keep logic and data close together so we don’t need queues and caches.
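
The primary/replica idea behind stateful services can be sketched in a few lines. This is a simplified model under stated assumptions: every write is applied to all live replicas before `write` returns (a real replicator works with quorums instead), and failover simply promotes the first surviving replica. `Replica` and `StatefulPartition` are hypothetical names, not Service Fabric’s Reliable Collections API.

```python
from typing import Dict, List, Optional

# Hypothetical model of a replicated stateful partition; not the Service Fabric API.


class Replica:
    """One copy of the partition's state."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.state: Dict[str, int] = {}
        self.alive = True


class StatefulPartition:
    """Writes go through the primary and are applied to every live replica
    before write() returns (simplified: all live replicas, not a quorum)."""

    def __init__(self, names: List[str]) -> None:
        self.replicas = [Replica(n) for n in names]
        self.primary = self.replicas[0]

    def write(self, key: str, value: int) -> None:
        if not self.primary.alive:
            self.fail_over()
        for replica in self.replicas:
            if replica.alive:
                replica.state[key] = value  # primary and secondaries apply the same write

    def read(self, key: str) -> Optional[int]:
        if not self.primary.alive:
            self.fail_over()
        return self.primary.state.get(key)  # state lives next to the logic, no external store

    def fail_over(self) -> None:
        survivors = [r for r in self.replicas if r.alive]
        if not survivors:
            raise RuntimeError("no live replica left")
        self.primary = survivors[0]  # promote a secondary; its state is already current


if __name__ == "__main__":
    partition = StatefulPartition(["a", "b", "c"])
    partition.write("orders", 3)
    partition.primary.alive = False  # simulate losing the primary's node
    print(partition.read("orders"), partition.primary.name)  # 3 b
```

Because the secondaries already hold the data, losing the primary costs a promotion rather than a reload from a queue or cache.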

OK, but what about the container stuff you mentioned?

So Service Fabric provides everything out of the box. You can think of it as a complete way to handle everything from beginning to end, including a defined programming model that even brings an easy way of handling stateful applications.

ACS, on the other hand, provides core infrastructure with significant flexibility, but that flexibility comes at a cost when you try to implement stateful services. However, the applications themselves are more portable, since we can run them wherever Docker containers can run, while microservices built for Service Fabric can only run on Service Fabric.

The focus for ACS right now is on open source technologies that can be adopted in whole or in part. As a result, both the orchestration layer and the application layer offer a high degree of portability: you can leverage open source components and deploy them wherever you want.

At the end of the day, Service Fabric is more restrictive but also gives you a faster development experience, while ACS provides the most flexibility.

So how exactly do containers and microservices on Service Fabric compare at this point?

What they do have in common is that both add another layer of abstraction on top of the things we are already dealing with. Forget what you know about virtual machines for a moment. Containers and microservices are exactly what engineers and developers are demanding to unlock new business scenarios, especially at a time when IoT, Big Data, insight and analytics are becoming more and more important for businesses worldwide. The cloud itself is the foundation that enables all of this, but the great flexibility that both containers and Service Fabric provide is really speeding up the innovation we’re seeing.

Organizations that have truly adopted the DevOps mindset are harnessing that investment and are capable of shipping quality code at a much faster cadence than ever before.

Coffee number 4 and closing notes

First, I want to thank you for spending these minutes reading my thoughts on Azure, containers, microservices, Service Fabric and where we’re heading.

2016 is a very exciting year, and things are changing very fast in this industry. We are seeing customers making big bets in certain areas, while others risk not making any bets at all. From my point of view, at least, I know where the important focus lies moving forward, and I will do my best to guide the people I meet along the way.

While writing these closing notes, I want to take the opportunity to point to the tenderloin of this blog post:

My background is all about ensuring that the infrastructure provides whatever the applications need.

That skillset is far from obsolete; however, I know that the true value lies in the upper layers.

Hopefully we are now realizing that even the infrastructure we have been ever so careful about is turning into a commodity, handled more than ever before through an ‘infrastructure as code’ approach. We trust that it works and that it empowers the PaaS components, which in turn move the world forward by powering SaaS applications.

Container technologies and microservices on Service Fabric take that for granted, and from now on, I am doing the same.