Configuring Remote Console for Windows Azure Pack

This blog post is part of my series of Windows Azure Pack findings.

Lately, I have been getting my hands very dirty, trying to break, fix and stress test Windows Azure Pack and its resource providers.

Today, I will explain how we configure Remote Desktop as part of our VM Cloud resource provider, to give console access to the virtual machines running on a multi-tenant infrastructure.

Background

Windows Server 2012 R2 Hyper-V introduced many innovations, and one of them, “Enhanced VM session mode” (or “RDP via VMBus”), was a feature that no one really cared about at first.

To put it simply: the traditional VMConnect session you initiate when connecting to a virtual machine (on port 2179 to the host, which then exposes the virtual machine) now supports redirecting local resources to a virtual machine session. This was not possible before unless you went through a TCP/IP RDP connection directly to the guest, which required network access to the guest.

Hyper-V’s architecture has something called “VMBus”, which is a communication mechanism (high-speed memory) used for inter-partition communication and device enumeration on systems with multiple active virtualized partitions. If you do not install the Hyper-V role, the VMBus is not used for anything. But when Hyper-V is installed, the VMBus is responsible for communication between the parent partition and the child partitions that have Integration Services installed.

The virtual machines (guests/child partitions) do not have direct access to the physical hardware on the host. They are only presented with virtual views (synthetic devices). When Integration Services are installed, the synthetic devices for storage, networking, graphics and input take advantage of them. Integration Services are a virtualization-aware implementation that uses the VMBus directly and bypasses any device emulation layer.

In other words:

The enhanced session mode connection uses a Remote Desktop Connection session via the VMBus, so no network connection to the virtual machine is required.

What problems does this really solve?

· Hyper-V Manager lets you connect to the VM without any network connectivity, and copy files between the host and the VM
· Using USB with the virtual machine
· Printing from a virtual machine to a local printer
· Take advantage of all of the above, without any network connectivity
· Deliver 100% IaaS to customers/tenants

The last point is important.

If you look at the service models in the cloud computing definition, Infrastructure as a Service gives the tenants the opportunity to deploy virtual machines, virtual storage and virtual networks.

In other words, all of the fabric content is managed by the service provider (networking, storage, hypervisor) and the tenants simply get an operating system within a virtual machine.

Now, to truly deliver that through the power of self-service, without any interaction from the service provider, we must also make sure that the tenants can do whatever they want with this particular virtual machine.

The networking stack is also a part of the operating system. (Remember that abstraction is key here, so the tenant should also manage, and be responsible for, networking within their virtual machines, not only their applications.) So to let tenants have full access to their virtual machines, without any network dependencies, Remote Desktop via VMBus is the solution.

OK, so now you know where we’re heading: we will use RDP via VMBus together with System Center 2012 R2 and Windows Azure Pack. This feature is referred to as “Remote Console” in this context, and provides the tenants with the ability to access the console of their virtual machines in scenarios where other remote tools (or RDP) are unavailable. Tenants can use Remote Console to access virtual machines when the virtual machine is on an isolated network, an untrusted network, or across the internet.

Requirements

Windows Server 2012 R2 – Hyper-V

System Center 2012 R2 – Virtual Machine Manager

System Center 2012 R2 – Service Provider Foundation (which was introduced in System Center 2012 SP1)

Windows Azure Pack

Remote Desktop Gateway

In this context, the Remote Desktop Gateway acts almost as it does in a VDI solution, signing connections from MSTSC to the gateway, but it redirects them to the VMBus rather than to a VDI guest.

After you have installed, configured and deployed the fabric, you can add the Remote Desktop Gateway to your VM Cloud resource provider. You can either add this in the same operation as when you add your VMM server(s), or do it afterwards. (This requires that you have installed a VM with the RD Gateway role and configured SSL certificates, both for the VMM -> Host -> RDGW communication and a CA certificate for external access.)

Before we go through the required configuration steps, I would like to mention some important things.

This has been a valuable learning experience, and I have collaborated with Marc Van Eijk (Azure MVP), Richard Rundle (PM at MS), Stanislav Zhelyazkov (Cloud MVP), and last but not least, Flemming Riis (Cloud MVP).

Thanks for all the input and valuable discussions, guys!

As part of this journey, I have been struggling with certificates to get everything up and running. As you may be aware, I am not a PKI master, and I am not planning to become one either, but it is nice to have a clear understanding of the requirements in this setup.

1) The certificate you need for your VMM server(s), Hyper-V hosts (those that are part of a host group in a VMM cloud, which is further exposed through SPF to a Plan in WAP) and the RD Gateway can be self-signed. I bet many will try to configure this with self-signed certificates in their lab, and feel free to do so. But you must configure it properly. I’ve been burned here. Many times.

2) The certificate you need to access this remotely should be from a CA. If you want to demonstrate or use this in a real-world deployment, this is an absolute requirement. This certificate is only needed on the RD Gateway, and should represent the public FQDN of the RD Gateway that is accessible on port 443 from the outside.

3) I suggest you reread points 1 and 2 before you proceed.

4) I also suggest you get your hands on a trusted certificate so that you don’t have to struggle with the Hyper-V host configuration, as described later in this guide.

Configuring certificates on VMM

If you are using self-signed certificates, you should start by creating a self-signed certificate that meets the requirements for this scenario.

1) The certificate must not be expired.

2) The Key Usage field must contain a digital signature.

3) The Enhanced Key Usage field must contain the following Client Authentication object identifier: (1.3.6.1.5.5.7.3.2)

4) The root certificate for the certification authority (CA) that issued the certificate must be installed in the Trusted Root Certification Authorities certificate store.

5) The cryptographic service provider for the certificate must support SHA256.
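The first three requirements can be checked on a certificate object before it is handed to VMM. The following is a minimal sketch, not part of VMM or Hyper-V: `Test-RemoteConsoleCertificate` is a hypothetical helper name, it only looks at the validity period, Key Usage and Enhanced Key Usage, and it assumes that a certificate with no Key Usage extension at all counts as unrestricted. The trusted root and the cryptographic service provider (requirements 4 and 5) are not covered.

```powershell
# Hypothetical helper (not a VMM or Hyper-V cmdlet): reports which of the
# first three requirements a certificate meets.
function Test-RemoteConsoleCertificate {
    param(
        [Parameter(Mandatory)]
        [System.Security.Cryptography.X509Certificates.X509Certificate2]$Certificate
    )

    $clientAuthOid = '1.3.6.1.5.5.7.3.2'
    $now = Get-Date

    # Requirement 1: the certificate is inside its validity window.
    $notExpired = ($Certificate.NotBefore -le $now) -and ($Certificate.NotAfter -gt $now)

    # Requirement 2: Key Usage includes Digital Signature.
    # Assumption: a certificate without a Key Usage extension is treated as
    # unrestricted and therefore passes.
    $signatureFlag = [System.Security.Cryptography.X509Certificates.X509KeyUsageFlags]::DigitalSignature
    $keyUsageExtensions = @($Certificate.Extensions | Where-Object {
        $_ -is [System.Security.Cryptography.X509Certificates.X509KeyUsageExtension]
    })
    $allowsSignature = $keyUsageExtensions.Count -eq 0
    foreach ($extension in $keyUsageExtensions) {
        if ($extension.KeyUsages.HasFlag($signatureFlag)) { $allowsSignature = $true }
    }

    # Requirement 3: Enhanced Key Usage lists Client Authentication.
    $hasClientAuth = $false
    $ekuExtensions = @($Certificate.Extensions | Where-Object {
        $_ -is [System.Security.Cryptography.X509Certificates.X509EnhancedKeyUsageExtension]
    })
    foreach ($extension in $ekuExtensions) {
        foreach ($oid in $extension.EnhancedKeyUsages) {
            if ($oid.Value -eq $clientAuthOid) { $hasClientAuth = $true }
        }
    }

    [pscustomobject]@{
        Subject          = $Certificate.Subject
        NotExpired       = $notExpired
        DigitalSignature = $allowsSignature
        ClientAuthEku    = $hasClientAuth
    }
}

# Example on Windows, assuming the certificate sits in the current user's Personal store:
# Get-ChildItem Cert:\CurrentUser\My |
#     Where-Object { $_.Subject -eq 'CN=Remote Console Connect' } |
#     ForEach-Object { Test-RemoteConsoleCertificate -Certificate $_ }
```

If any of the three columns comes back False, it is probably easier to recreate the certificate than to debug Remote Console later.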

You can download makecert, and run the following command to create a working certificate:

makecert -n "CN=Remote Console Connect" -r -pe -a sha256 -e <mm/dd/yyyy> -len 2048 -sky signature -eku 1.3.6.1.5.5.7.3.2 -ss My -sy 24 "remoteconsole.cer"

Once this is done, open MMC, add the Certificates snap-in and connect to the current user’s store. Under Personal, you will find the certificate.

1) Export the certificate (.cer) to a folder.

2) Export the certificate with its private key (.pfx) to a folder, and create a password.

For the VMM server, we load the pfx into the VMM database so that VMM doesn’t need to rely on the certs being in the cert store of each node. You shouldn’t need to do anything on the VMM server except import the pfx into the VMM database using the Set-SCVMMServer cmdlet. The VMM server is responsible for creating tokens.

Now, open VMM, launch the VMM PowerShell module, and execute these cmdlets to import the PFX into the VMM database:

# Password you chose when exporting the .pfx
$mypwd = ConvertTo-SecureString "password" -AsPlainText -Force
# The exported .pfx file (run this from the folder you exported it to)
$cert = Get-ChildItem .\RemoteConsoleConnect.pfx
$VMMServer = "VMMServer01.Contoso.com"
# Use the same certificate for the gateway and the Hyper-V hosts
Set-SCVMMServer -VMConnectGatewayCertificatePassword $mypwd -VMConnectGatewayCertificatePath $cert -VMConnectHostIdentificationMode FQDN -VMConnectHyperVCertificatePassword $mypwd -VMConnectHyperVCertificatePath $cert -VMConnectTimeToLiveInMinutes 2 -VMMServer $VMMServer

This will import the pfx and configure VMM with the certificate and its password for both the gateway and the Hyper-V hosts, the host identification mode (which is FQDN) and the time to live of the tokens in minutes (2 in this example).
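To make the time to live more concrete: a short value means a token is only usable for a brief window after VMM creates it. The sketch below only illustrates that idea and is not the validation logic that Hyper-V or the RD Gateway actually runs. `Test-TokenWithinLifetime` is a hypothetical name, and rejecting tokens that appear to come from the future is an assumption made for the example.

```powershell
# Illustration only: decides whether a token is still inside its lifetime.
# Hypothetical helper, not a VMM, Hyper-V or RD Gateway API.
function Test-TokenWithinLifetime {
    param(
        [Parameter(Mandatory)][datetime]$IssuedAtUtc,
        [Parameter(Mandatory)][datetime]$NowUtc,
        [int]$TimeToLiveInMinutes = 2
    )

    $ageInMinutes = ($NowUtc - $IssuedAtUtc).TotalMinutes

    # Assumption: a negative age (clock skew) is treated as invalid.
    return ($ageInMinutes -ge 0) -and ($ageInMinutes -le $TimeToLiveInMinutes)
}

# A token created at 12:00 and presented at 12:01 is inside a 2 minute lifetime;
# the same token presented at 12:03 is not.
Test-TokenWithinLifetime -IssuedAtUtc '2014-01-01T12:00:00' -NowUtc '2014-01-01T12:01:00'
Test-TokenWithinLifetime -IssuedAtUtc '2014-01-01T12:00:00' -NowUtc '2014-01-01T12:03:00'
```

In practice this suggests keeping the clocks of the VMM server, the RD Gateway and the Hyper-V hosts in sync, and raising the value if tenants tend to open the connection file late.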

Once this is done, you can either wait for VMM to refresh the Hyper-V hosts in each host group, which deploys the certificates, or trigger the refresh manually through PowerShell with this cmdlet:

Get-SCVMHost -VMMServer "VMMServer01.Contoso.com" | Read-SCVMHost

Once each host is refreshed in VMM, VMM installs the certificate in the Personal certificate store of the Hyper-V host and configures the host to validate tokens by using the certificate.

The downside of using a self-signed certificate in this setup is that we have to perform some manual actions on the hosts afterwards:

Configuring certificates on the Hyper-V hosts

Hyper-V will accept tokens that are signed by using specific certificates and hash algorithms. VMM performs the required configuration for the Hyper-V hosts.

Since we are using a self-signed certificate, we must import the public key (not the private key) of the certificate into the Trusted Root Certification Authorities certificate store on the Hyper-V hosts. The following cmdlet will do this for you:

Import-Certificate -CertStoreLocation cert:\LocalMachine\Root -Filepath "<certificate path>.cer"

You must restart the Hyper-V Virtual Machine Management service if you install a certificate after you configure Virtual Machine Manager. (If you have running virtual machines on the hosts, put one host at a time in maintenance mode with VMM, wait until it is empty, reboot it, and repeat the same action on every other host before you proceed. Yes, we are getting punished for using self-signed certificates here.)

Please note:

This part, where the Hyper-V Virtual Machine Management service requires a restart, is very critical. If Remote Console is not working at all, the cause could be the timing of when the self-signed certificate was added to the trusted root on the Hyper-V hosts. If the certificate is added to the trusted root after VMM has pushed the certificate, Hyper-V won’t recognize the self-signed cert as trusted, since it queries the cert store on process startup and not for each token it validates.

Now we need to verify that the certificate is really installed in the Personal certificate store of the Hyper-V hosts, using the following cmdlet:

dir cert:\localmachine\My\ | Where-Object { $_.subject -eq "CN=Remote Console Connect" }

Also, we must check the hash configuration for the trusted issuer certificate by running these cmdlets:

$Server = "nameofyourFQDNHost"
$TSData = Get-WmiObject -ComputerName $Server -Namespace "root\virtualization\v2" -Class "Msvm_TerminalServiceSettingData"
$TSData

Great, we are now done with both VMM and our Hyper-V hosts.

Configuring certificates on the Remote Desktop Gateway

Once this Remote Desktop Gateway is configured for Remote Console, it can only be used for that purpose. The configuration change makes the gateway unusable for anything else, because we install an authentication plug-in from the VMM media on this server.

In order to support federated authentication, VMM includes a VMM Console Connect Gateway, which is located at CDLayout.EVAL\amd64\Setup\msi\RDGatewayFedAuth on the VMM media.

For an HA scenario, you can install multiple RD Gateways with the Console Connect Gateway behind a load balancer.

Once you have installed and configured the RD Gateway with a trusted certificate from a CA for the front-end part (the public FQDN that is added to the VM Cloud resource provider in WAP), you can move forward and import the public key of the Remote Console certificate (the .cer file exported earlier) into the Personal certificate store on each RD Gateway server, using the following cmdlet:

C:\> Import-Certificate -CertStoreLocation cert:\LocalMachine\My -Filepath "<certificate path>.cer"

Since we are using a self-signed certificate in this setup, we must do the same for the Trusted Root Certification Authorities certificate store for the machine account with the following cmdlet:

C:\> Import-Certificate -CertStoreLocation cert:\LocalMachine\Root -Filepath "<certificate path>.cer"

When the RD Gateway is authenticating tokens, it accepts only tokens that are signed by using specific certificates and hash algorithms. This configuration is performed by setting the TrustedIssuerCertificateHashes and the AllowedHashAlgorithms properties in the WMI FedAuthSettings class.

Use the following cmdlets to set the TrustedIssuerCertificateHashes property:

$Server = "rdconnect.internal.systemcenter365.com"
$Thumbprint = "thumbprint of your certificate"
$TSData = Get-WmiObject -ComputerName $Server -Namespace "root\TSGatewayFedAuth2" -Class "FedAuthSettings"
# Trust tokens signed with the Remote Console certificate
$TSData.TrustedIssuerCertificateHashes = $Thumbprint
$TSData.Put()
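A thumbprint copied from the certificate dialog in MMC can carry spaces and, in some cases, an invisible leading character, which makes the stored value fail to match even though it looks right. Here is a small sketch for cleaning the value before assigning it to `$Thumbprint`. `ConvertTo-CleanThumbprint` is a hypothetical helper name, and the thumbprint in the example is a made-up placeholder.

```powershell
# Hypothetical helper: normalizes a certificate thumbprint pasted from the UI.
function ConvertTo-CleanThumbprint {
    param([Parameter(Mandatory)][string]$Thumbprint)

    # Keep only hexadecimal characters; this drops spaces and invisible characters.
    $clean = ($Thumbprint -replace '[^0-9a-fA-F]', '').ToUpperInvariant()

    # A certificate thumbprint is a SHA-1 hash: 20 bytes, 40 hex characters.
    if ($clean.Length -ne 40) {
        throw "Expected 40 hexadecimal characters, got $($clean.Length)."
    }
    return $clean
}

# Placeholder value, not a real certificate:
$Thumbprint = ConvertTo-CleanThumbprint 'a9 09 50 2d d8 2a e4 14 33 e6 f8 38 86 b0 0d 42 77 a3 2a 7b'
$Thumbprint
```

Another way to avoid copy and paste entirely is to read the Thumbprint property of the certificate object returned by the `dir cert:\localmachine\My\` query used elsewhere in this post.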

Now, make sure that the RD Gateway is configured to use the Console Connect Gateway (VMM plug-in) for authentication and authorization, by running the following cmdlet:

C:\> Get-WmiObject -Namespace root\CIMV2\TerminalServices -Class Win32_TSGatewayServerSettings

Next, we must make sure that the certificate has been installed in the Personal certificate store for the machine account, by running the following command:

dir cert:\localmachine\My\ | Where-Object { $_.subject -eq "CN=Remote Console Connect" }

And last, check the configuration of the Console Connect Gateway, by running this cmdlet:

Get-WmiObject -ComputerName $Server -Namespace "root\TSGatewayFedAuth2" -Class "FedAuthSettings"


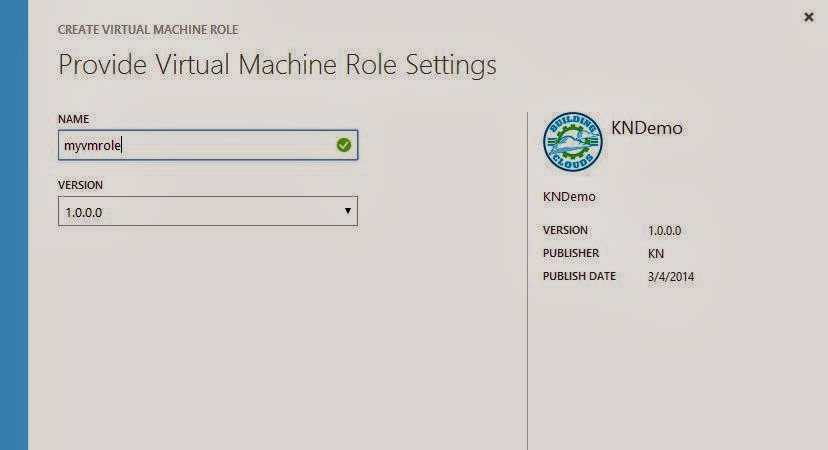

Now, if you have added your RD Gateway to Windows Azure Pack, you can deploy virtual machines after subscribing to a plan, and test the Remote Console Connect feature.