Business Continuity for the management stamp

I’ve been working with many service providers over the

last months where both Azure Pack and Azure Site Recovery has been critical

components.

However, looking at the relatively big footprint with the

DR add-on in Update Rollup 4 for Windows Azure Pack, organizations has started

in the other end in order to bring business continuity to their clouds.

For one of the larger service providers, we had to dive

deep into the architecture of Hyper-V Replica, SCVMM and Azure Site Recovery

before we knew how to design the optimal layout to ensure business continuity.

In each and every ASR design, you must look at your

fabric and management stamp and start looking at the recovery design before you create the disaster design. Did I lost

you there?

What I’m saying is that it’s relatively easy to perform

the heavy lifting of the data, but once the shit hit the fans, you better know

what to expect.

In this particular case, we had a common goal:

We want to ensure

business continuity for the entire management stamp with a single click, so

that tenants can create, manage and operate their workloads without

interruption. This should be achieved in an efficient way with a minimal

footprint.

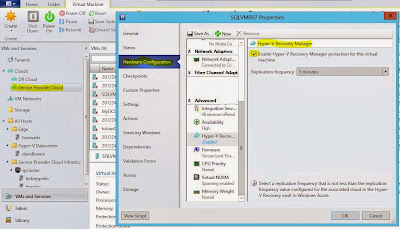

When we first saw the release of Azure Site Recovery, it

was called “Hyper-V Recovery Manager” and required two SCVMM management stamps

to perform DR between sites. The feedback from potential customers were quite

loud and clear: people wanted to leverage their existing SCVMM investment and

perform DR operations with a single SCVMM management stamp. Microsoft listened

and let us now perform DR between SCVMM Clouds, using the same SCVMM server.

So IMHO, using a single SCVMM stamp is always preferred

whenever it is possible, so that was also my recommendations when it came to

the initial design for this case.

In this blog post, I will share my findings and

workaround for making this possible, ensuring business continuity for the

entire management stamp.

The initial configuration

The first step we had to make when designing the

management stamp, was to plan and prepare for SQL AlwaysOn Availability Groups.

System Center 2012 R2 – Virtual Machine Manager, Service

Manager, Operations Manager and Orchestrator does all support AlwaysOn

Availability Groups.

Why plan for SQL

AlwaysOn Availability Groups when we have the traditional SQL Cluster solution

available for High-Availability?

This is a really good question – and also very important

as this is the key for realizing the big goal here. AlwaysOn is a

high-availability and disaster recovery solution that provides an

enterprise-level alternative to database mirroring. The solution maximizes the

availability of a set of user databases and supports a failover environment for

those selected databases.

Compared to a traditional SQL Cluster – that can also use

shared VHDXs, this was a no brainer. A shared VHDX would have given us a

headache and increased the complexity with Hyper-V Replica.

SQL AlwaysOn Availability Groups let us use local storage

for each VM within the cluster configuration, and enable synchronous replication

between the nodes on the selected user databases.

Alright, the SQL discussion is now over, and we proceeded

to the fabric design.

In total, we would have several Hyper-V Clusters for

different kind of workload, such as:

·

Management

·

Edge

·

IaaS

·

DR

Since this was a Greenfield project, we had to deploy

everything from scratch.

We started with the Hyper-V Management Cluster and from

there we deployed two VM instances in a guest cluster configuration, installed

with SQL Server for Always On Availability Groups. Our plan was to put the

System Center databases – as well as WAP databases onto this database cluster.

Once we had deployed a Highly-Available SCVMM solution,

including a HA library server, we performed the initial configuration on the

management cluster nodes.

As stated earlier, this is really a chicken and egg

scenario. Since we are working with a cluster here, it’s straightforward to

configure the nodes – one at a time, putting one node in maintenance mode, move

the workload and repeat the process on the remaining node(s). Our desired state

configuration at this point is to deploy the logical switch with its profile

settings to all nodes, and later provision more

storage and define classifications within the fabric.

The description here is relatively high-level, but to summarize:

we do the normal fabric stuff in VMM at this point, and prepare the

infrastructure to deploy and configure the remaining hosts and clusters.

For more

information around the details about the design, I used the following script

that I have made available that turns SCVMM into a fabric controller for

Windows Azure Pack and Azure Site Recovery integration:

Once the initial configuration was done, we deployed the

NVGRE gateway hosts, DR hosts, Iaas hosts, Windows Azure Pack and the remaining

System Center components in order to provide service offerings through the

tenant portal.

If you are very keen to know more about this process, I

recommend to read our whitepaper which covers this end-to-end:

Here’s an overview of the design after the initial

configuration:

If we look at this from a different – and perhaps a more

traditional perspective, mapping the different layers with each other, we have

the following architecture and design of SCVMM, Windows Azure Pack, SPF and our

host groups:

So far so good. The design of the stamp was finished and

we were ready to proceed with the Azure Site Recovery implementation

Integrating Azure Site Recovery

To be honest, at this point we thought the hardest part

of the job was done, such as ensuring HA for all the workloads as well as

integrating NVGRE to the environment, spinning up complex VM roles just to

improve the tenants and so on and so forth.

We added ASR to the solution and was quite confident that

this would work as a charm since we had SQL AlwaysOn as part of the solution.

We soon found out that we had to do some engineering

before we could celebrate.

Here’s a description of the issue we encountered.

In the Microsoft Azure portal, you configure ASR and

perform the mapping between your management servers and clouds and also the VM

networks.

As I described earlier in this blog post, the initial

design of Azure Site Recovery in an “Enterprise 2 Enterprise” (on-prem 2

on-prem) scenario, was to leverage two SCVMM management servers. Then the

administrator had the opportunity to duplicate the network artifacts (network

sites, VLAN, IP pools etc) across sites, ensuring that each VM could be brought

online on the secondary site with the same IP configuration as on the primary

site.

Sounds quite obvious and really something you would

expect, yeah?

Moving away from that design and rather use a single

SCVMM management server (a single management

server, that is highly-available is

not the same as two SCVMM management servers), gave us some challenges.

1) We

could (of course) not create the same networking artifacts twice within a

single SCVMM management server

2) We

could not create an empty logical network and map the primary network with this

one. This would throw an error

3) We

could not use the primary network as our secondary as well, as this would give

the VMs a new IP address from the IP

pool

4) Although

we could update IP addresses in DNS,

the customer required to use the exact IP configuration on the secondary site

post failover

Ok, what do we do now?

At that time it felt a bit awkward to say that we were

struggling to keep the same IP configuration across sites.

After a few more cups of coffee, it was time to dive into

the recovery plans in ASR to look for new opportunities.

A recovery plan groups virtual machines together for the

purposes of failover and recovery, and it specifies the order in which groups

of VMs should fail over. We were going to create several recovery plans, so

that we could easily and logically group different kind of workloads together

and perform DR in a trusted way

Here’s how the recovery plan for the entire

stamp looks like:

So this recovery plan would power off the VMs in a

specific order, perform the failover to the secondary site and then power on

the VMs again in a certain order specified by the administrator.

What was interesting for us to see, was that we could

leverage our Powershell skills as part of these steps.

Each step can have an associated script and a manual task

assigned.

We found out that the first thing we had to do before

even shutting down the VMs, was to run a powershell script that would verify

that the VMs would be connected to the proper virtual switch in Hyper-V.

Ok, but why?

Another good question. Let me explain.

Once you are replicating a virtual machine using Hyper-V

Replica, you have the option to assign an alternative IP address to the replica

VM. This is very interesting when you have different networks across your sites

so that the VMs can be online and available immediately after a failover.

In this specific customer case, the VLAN(s) were

stretched and made available on the secondary site as well, hence the

requirement to keep the exact network configuration. In addition, all of the

VMs had assigned static IP addresses from the SCVMM IP Pools.

However, since we didn’t do any mapping at the end in the

portal, just to avoid the errors and the wrong outcome, we decided to handle

this with powershell.

When enabling replication on a virtual machine in this

environment, and not mapping to a specific VM network, the replica VM would

have the following configuration:

As you can see, we are connected to a certain switch, but

the “Failover TCP/IP” checkbox was enabled with no info. You probably know what

this means? Yes, the VM will come up with an APIPA configuration. No good.

What we did

We created a powershell script that:

a) Detected

the active Replica hosts before failover (using the Hyper-V Powershell API)

b) Ensured

that the VM(s) were connected to the right virtual switch on Hyper-V (using the

Hyper-V Powershell API)

c) Disabled

the Failover TCP/IP settings on every VM

a. Of

all of the above were successful, the recovery plan could continue to perform

the failover

b. If

any of the above were failing, the recovery plan was aborted

For this to work, you have to ensure that the following

pre-reqs are met:

·

Ensure that you have at least one

library server in your SCVMM deployment

·

If you have a HA SCVMM server

deployment as we had, you also have a remote library share (example:

\\fileserver.domain.local\libraryshare

). This is where you store your powershell script (nameofscript.ps1) Then you must configure the share as follow:

a.

Open the Registry editor

b.

Navigate to HKEY_LOCAL_MACHINE_SOFTWARE\Microsoft\Microsoft System Center Virtual

Machine Manager Server\DRAdaper/Registration

c.

Edit the value ScriptLibraryPath

e.

Provide permission to the share

location

This

registry setting will replicate across your SCVMM nodes, so you only have to do

this once.

Once the script has been placed in the library and the

registry changes are implemented, you can associate the script with one or more

tasks within a recovery plan as showed below.

Performing the recovery plan(s) now would ensure that every VM that was

part of the plan, was brought up at the recovery site with the same IP

configuration as on the primary site.

With this, we had a “single-button” DR solution for the entire

management stamp, including Windows Azure Pack and its resource providers.

-kn