How did God manage to create the world in only 6 days?

-

He had no legacy!

With that, I would like to explain what the new Microsoft Azure Stack is all about.

As many of you already know, we have all been part of a journey over the last couple of years in which Microsoft has been aiming for consistency across its clouds: private, service provider and public.

Microsoft Azure has been the guiding star, and it is quite clear from the “mobile first, cloud first” strategy that they are putting all their effort into the cloud first and later making bits and bytes available on-prem where it makes sense.

Regarding consistency, I would like to point out that we have had “Windows Azure Services for Windows Server” (v1) and “Windows Azure Pack” (v2), which brought the tenant experience on-prem with portals and common APIs.

Let us stop there for a bit.

The APIs we got on-prem as part of the service management APIs had much in common with the ones we had in Azure, but they were neither consistent nor identical.

If you’ve ever played around with the Azure PowerShell module, you have probably noticed that we had different cmdlets when targeting an Azure Pack endpoint compared to Microsoft Azure.

For the portal experience, we got two portals. One portal was for the service provider, where the admin could configure the underlying resource providers, create hosting plans and define settings and quotas through the admin API. These hosting plans were made available to the tenants through subscriptions in the tenant portal, which accessed the resources through the tenant API.

The underlying resource providers were different REST APIs that could contain several different resource types. Take the VM Cloud resource provider, for example, which is a combination of System Center Virtual Machine Manager and System Center Service Provider Foundation.

Let us stop here as well, and reflect on what we have just read.

1) So far, we have had a common set of APIs between Azure Pack and Azure

2) On-prem, we are relying on System Center in order to bring IaaS into Azure Pack

With cloud consistency in mind, it is about time to point out that to move forward, we have to get the exact same APIs on-prem as we have in Microsoft Azure.

Second, we all know that there are no System Center components managing the hyper-scale cloud in Azure.

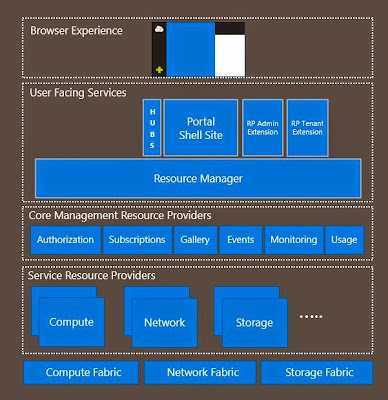

Let us take a closer look at the architecture of Microsoft Azure Stack.

Starting at the top, we can see that we have the same, consistent browser experience.

The user-facing services consist of hubs, a portal shell site and RP extensions for both admins (service provider) and tenants. This shows that we won’t have two different portals as we have in Azure Pack today; instead, things are differentiated through the extensions.

These components all live on top of something called “Azure Resource Manager”, which is where all the fun, and the real consistency, is born.

Previously in Azure, we were accessing the Service Management API when interacting with our cloud services.

Now, this has changed and Azure Resource Manager is the new, consistent and powerful API that will be managing all the underlying resource providers, regardless of cloud.

Azure Resource Manager introduces an entirely new way of thinking about your cloud resources.

A challenge with both Azure Pack and the former Azure portal was that once we had several components that made up an application, it was really hard to manage its life-cycle. This has changed drastically with ARM. Imagine a complex service, such as a SharePoint farm, containing many different tiers, instances, scripts and applications. With ARM, we can use a template that will create a resource group with the resources you need to support the service. A resource group is a logical group that lets you control RBAC, life-cycle, billing etc. for the entire group of resources, but you can also specify this at a lower level on the resources themselves.

Also, ARM itself is declarative and idempotent: you describe the desired end state, and deploying the same template again leads to the same result. You can already start to imagine how powerful this will be.
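To make the idempotency point a bit more concrete, here is a minimal sketch in Python. It is not ARM code, just a toy model of a declarative deployment: the resource names, the property names and the `apply_desired_state` function are all made up for illustration.

```python
from typing import Dict, List, Tuple

Resource = Dict[str, str]     # property name -> value
State = Dict[str, Resource]   # resource name -> properties


def apply_desired_state(current: State, desired: State) -> Tuple[State, List[str]]:
    """Bring `current` in line with `desired` and report what had to change."""
    new_state = {name: dict(props) for name, props in current.items()}
    actions: List[str] = []
    for name, props in desired.items():
        if name not in new_state:
            actions.append(f"create {name}")
        elif new_state[name] != props:
            actions.append(f"update {name}")
        else:
            continue  # already matches the declaration, nothing to do
        new_state[name] = dict(props)
    return new_state, actions


# Hypothetical declaration of the end state we want.
desired = {
    "web-vm": {"type": "virtualMachine", "size": "Standard_A1"},
    "web-nic": {"type": "networkInterface", "subnet": "frontend"},
}

state, first_run = apply_desired_state({}, desired)
state, second_run = apply_desired_state(state, desired)

print(first_run)   # actions needed on an empty environment
print(second_run)  # no actions left when the same declaration is applied again
```

The caller never says "create this, then that". It only declares what should exist, and running the same declaration a second time changes nothing.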

In the context of the Azure Stack architecture we are looking at right now, this means we can:

1) Create an Azure Gallery Template (.json)

a. Deploy the template to Microsoft Azure

and/or

b. Deploy the template to Microsoft Azure Stack
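As a rough illustration of "one template, two clouds", the sketch below builds an empty template skeleton in Python and prepares the same deployment payload for two endpoints. The Azure Stack endpoint, the subscription placeholder, the resource group name and the `build_deployment_request` helper are assumptions made up for this example. The URL shape follows the public Azure REST convention (the required `api-version` query parameter is left out), and that Azure Stack accepts the same path is exactly the consistency promise discussed here, not something this sketch verifies. Nothing is sent over the network.

```python
import json

# Minimal template skeleton. No resources are declared here; a real template
# would list them under "resources".
template = {
    "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
    "contentVersion": "1.0.0.0",
    "parameters": {},
    "variables": {},
    "resources": [],
    "outputs": {},
}

# The Azure Stack endpoint below is a hypothetical placeholder.
targets = {
    "Microsoft Azure": "https://management.azure.com",
    "Microsoft Azure Stack": "https://management.azurestack.example",
}


def build_deployment_request(endpoint, subscription_id, resource_group, name, template):
    """Return the (url, body) pair for a template deployment; nothing is sent."""
    url = (
        f"{endpoint}/subscriptions/{subscription_id}"
        f"/resourcegroups/{resource_group}"
        f"/providers/Microsoft.Resources/deployments/{name}"
    )
    body = {"properties": {"mode": "Incremental", "template": template}}
    return url, json.dumps(body, sort_keys=True)


requests_by_cloud = {
    cloud: build_deployment_request(
        endpoint, "<subscription-id>", "sharepoint-rg", "farm-deployment", template
    )
    for cloud, endpoint in targets.items()
}

# Only the endpoint differs; the payload is identical for both clouds.
bodies = {body for _, body in requests_by_cloud.values()}
print(len(bodies))
for cloud, (url, _) in requests_by_cloud.items():
    print(cloud, "->", url)
```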

It is time to take a break and put a smile on your face.

Now, let us explain the architecture a bit further.

Under the Azure Resource Manager, we will have several Core Management Resource Providers as well as Service Resource Providers.

The Core Management Resource Providers include Authorization, which is where all the RBAC settings and policies live. All the services will also share the same Gallery now, instead of having separate galleries for Web, VMs etc. as we have in Azure Pack today. Also, all the events, monitoring and usage related settings live in these core management resource providers. One of the benefits here is that third parties can now plug in their resource providers and harness the existing architecture of these core RPs.

Further, we currently have Compute, Network and Storage as Service Resource Providers.

If we compare this with what we already have in Azure Pack today, the VM Cloud Resource Provider gives us all of this through a single resource provider (SCVMM/SCSPF) that basically provides everything we need to deliver IaaS.

I assume that you have read the entire blog post until now, and as I wrote earlier, there are no System Center components managing Microsoft Azure today.

So why do we have 3 different resource providers in Azure Stack for compute, network and storage, when we could potentially have everything from the same RP?

In order to leverage the beauty of a cloud, we need the opportunity to have a loosely coupled infrastructure, where the resources and different units can scale separately and independently of each other.

Here’s an example of how you can take advantage of this:

1) You want to deploy an advanced application to an Azure/Azure Stack cloud, so you create a base template containing the common artifacts, such as image, OS settings etc.

2) Further, you create a separate template for the NIC settings and the storage settings

3) As part of the deployment, you create references and possibly some “depends-on” relationships between these templates so that everything will be deployed within the same Azure Resource Group (that shares the same common life-cycle, billing, RBAC etc.)

4) Next, you might want to change, or possibly replace, some of the components in this resource group. As an example, let us say that you put some effort into the NIC configuration. You can then delete the VM itself (from the Compute RP), but keep the NIC (in the Network RP).
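The steps above can be sketched in a few lines of Python. This is a toy model, not ARM itself: the resource names and the two helper functions are invented for illustration, and the `dependsOn` key simply mirrors the “depends-on” idea from step 3.

```python
from typing import Dict, List

# One resource group with resources owned by three different resource providers.
resource_group: Dict[str, dict] = {
    "app-storage": {"provider": "Storage", "dependsOn": []},
    "app-nic": {"provider": "Network", "dependsOn": []},
    "app-vm": {"provider": "Compute", "dependsOn": ["app-nic", "app-storage"]},
}


def deployment_order(resources: Dict[str, dict]) -> List[str]:
    """Return resource names so that each one comes after its dependencies."""
    ordered: List[str] = []
    visiting = set()

    def visit(name: str) -> None:
        if name in ordered:
            return
        if name in visiting:
            raise ValueError(f"circular dependency at {name}")
        visiting.add(name)
        for dependency in resources[name]["dependsOn"]:
            visit(dependency)
        visiting.discard(name)
        ordered.append(name)

    for name in resources:
        visit(name)
    return ordered


def delete_resource(resources: Dict[str, dict], name: str) -> Dict[str, dict]:
    """Return a copy of the group without `name`; other resources are untouched."""
    return {n: r for n, r in resources.items() if n != name}


order = deployment_order(resource_group)
after_delete = delete_resource(resource_group, "app-vm")

print(order)                # the NIC and storage are deployed before the VM
print(sorted(after_delete)) # the VM is gone, the NIC and storage remain
```

Because the NIC belongs to the Network RP and the VM to the Compute RP, removing the VM does not touch the NIC configuration you spent time on.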

This gives us much more flexibility compared to what we are used to.

Summary

So, Microsoft is for real bringing Azure services to your datacenters now, as part of the 2016 wave that will be shipped next year. The solution is called “Microsoft Azure Stack” and won’t “require” System Center, but you can use System Center for management purposes etc. if you want, which is probably a very good idea.

It is an entirely new product for your datacenter: a cloud-optimized application platform, using Azure-based compute, network and storage services.

In the next couple of weeks, I will write more about the underlying resource providers and also how to leverage the ARM capabilities.

Stay tuned for more info around Azure Stack and Azure Resource Manager.
