These days, you are most likely looking for solutions where you can leverage PowerShell to gain some level of automation, whether it’s on premises or in the cloud.

I have written before about the common service management API in the Cloud OS vision, where Microsoft Azure and Azure Pack share the exact same management API.

In this blog post, we will look at the tenant public API in Azure Pack, see how to make it available to your tenants, and also how to do some basic tasks through PowerShell.

Azure Pack can be installed either with the express setup (all portals, sites and APIs on the same machine) or distributed, with dedicated virtual machines for each portal, site and component. Looking at the APIs only, we have the following:

The Windows Azure Pack service management API includes three separate components:

· Windows Azure Pack: Admin API (not publicly accessible). The Admin API exposes functionality to complete administrative tasks from the management portal for administrators or through the use of PowerShell cmdlets. (Blog post: http://kristiannese.blogspot.no/2014/06/working-with-admin-api-in-windows-azure.html)

· Windows Azure Pack: Tenant API (not publicly accessible). The Tenant API enables users, or tenants, to manage and configure cloud services that are included in the plans that they subscribe to.

· Windows Azure Pack: Tenant Public API (publicly accessible). The Tenant Public API enables end users to manage and configure cloud services that are included in the plans that they subscribe to. The Tenant Public API is designed to serve all the requirements of end users that subscribe to the various services that a hosting service provider provides.
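On the server(s) running Azure Pack, you can list the IIS sites behind these three APIs together with their current bindings. The sketch below is an illustration only: it assumes the WebAdministration module and the default site names from a standard installation (MgmtSvc-AdminAPI, MgmtSvc-TenantAPI and MgmtSvc-TenantPublicAPI), so adjust the names if your deployment differs. The function name is hypothetical.

```powershell
function Get-WapApiSiteBinding {
    <#
    .SYNOPSIS
    Lists the IIS sites hosting the Azure Pack APIs and their bindings (sketch).
    .NOTES
    Assumes the default Azure Pack site names; run elevated on the API server.
    #>
    [CmdletBinding()]
    param(
        # Assumed default site names for the Admin API, Tenant API and Tenant Public API
        [string[]]$SiteName = @('MgmtSvc-AdminAPI', 'MgmtSvc-TenantAPI', 'MgmtSvc-TenantPublicAPI')
    )

    Import-Module WebAdministration -ErrorAction Stop

    foreach ($name in $SiteName) {
        # Get-WebBinding returns one object per binding on the site
        foreach ($binding in Get-WebBinding -Name $name) {
            [pscustomobject]@{
                Site     = $name
                Protocol = $binding.protocol
                # bindingInformation has the form "IP:port:hostname"
                Binding  = $binding.bindingInformation
            }
        }
    }
}
```

Usage: `Get-WapApiSiteBinding | Format-Table -AutoSize`. In a distributed setup, run it on each machine and pass only the site names hosted there.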

Making the Tenant Public API available and accessible for your tenants

By default, the Tenant Public API is installed on port 30006, which is not very firewall friendly.

We have already made the tenant portal and the authentication site available on port 443 (described by Flemming in this blog post: http://flemmingriis.com/windows-azure-pack-publishing-using-sni/), and now we need to configure the tenant public API as well.

1) Create a DNS record for your tenant public API endpoint.

We need a DNS registration for the API. In our case, we have registered “api.systemcenter365.com” and are ready to go.
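If the public zone for your domain happens to be hosted on a Windows Server DNS server, the record can also be created with the DnsServer module. This is only a sketch: the zone and record names match this post, but the IP address is a placeholder you must supply (203.0.113.10 in the usage line is a documentation address), and the function name is hypothetical. Many providers host public DNS elsewhere, in which case you create the record in their portal instead.

```powershell
function New-TenantPublicApiDnsRecord {
    [CmdletBinding()]
    param(
        [string]$ZoneName = 'systemcenter365.com',
        [string]$RecordName = 'api',
        # Placeholder: the public IP address in front of your internet-facing server
        [Parameter(Mandatory)]
        [string]$IPv4Address
    )

    # Requires the DNS Server role tools (DnsServer module)
    Import-Module DnsServer -ErrorAction Stop

    # Create an A record, for example api.systemcenter365.com -> public IP
    Add-DnsServerResourceRecordA -ZoneName $ZoneName -Name $RecordName -IPv4Address $IPv4Address

    # Check that the name resolves (public propagation may take a while)
    Resolve-DnsName -Name "$RecordName.$ZoneName" -Type A
}
```

Usage: `New-TenantPublicApiDnsRecord -IPv4Address 203.0.113.10`.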

2) Log on to the virtual machine running the tenant public API.

In our case, this is the same virtual machine that runs the rest of the internet-facing parts, like the tenant site and the tenant authentication site. This means that we have already registered cloud.systemcenter365.com and cloudauth.systemcenter365.com to this particular server, and now also api.systemcenter365.com.
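Since all three host names should point to the same server in this setup, a quick check is to resolve them side by side and compare the addresses. The host names below are the ones used in this post; the function name is hypothetical, and `Resolve-DnsName` is only available on Windows 8 / Windows Server 2012 and later.

```powershell
function Test-WapPublicHostName {
    [CmdletBinding()]
    param(
        [string[]]$HostName = @(
            'cloud.systemcenter365.com',
            'cloudauth.systemcenter365.com',
            'api.systemcenter365.com'
        )
    )

    foreach ($name in $HostName) {
        # Resolve-DnsName follows CNAMEs; keep only the final A records
        $addresses = Resolve-DnsName -Name $name -Type A -ErrorAction SilentlyContinue |
            Where-Object { $_.Type -eq 'A' } |
            Select-Object -ExpandProperty IPAddress

        [pscustomobject]@{
            HostName  = $name
            Addresses = ($addresses -join ', ')
            Resolved  = [bool]$addresses
        }
    }
}
```

Usage: `Test-WapPublicHostName | Format-Table -AutoSize`. If one name resolves to a different address than the others, fix DNS before touching IIS.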

3) Change the bindings on the tenant public API in IIS

Open IIS Manager and locate the tenant public API site. Click Bindings, change the port to 443, select your certificate, and type the correct host name that the tenants will be using when accessing this API.
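The same change can be scripted with the WebAdministration module. This is a sketch under several assumptions: the site uses the default name MgmtSvc-TenantPublicAPI, the certificate is already in the LocalMachine\My store, and, because the tenant site and authentication site already share port 443, the new binding uses Server Name Indication (SslFlags 1). Rather than editing the binding in place as in IIS Manager, it adds a new HTTPS binding and then removes the old one on port 30006. The function name is hypothetical; verify the result in IIS Manager afterwards.

```powershell
function Set-TenantPublicApiBinding {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        # Assumed default IIS site name for the Tenant Public API
        [string]$SiteName = 'MgmtSvc-TenantPublicAPI',
        [string]$HostName = 'api.systemcenter365.com',
        # Thumbprint of a certificate in LocalMachine\My that covers $HostName
        [Parameter(Mandatory)]
        [string]$CertificateThumbprint,
        [int]$OldPort = 30006
    )

    Import-Module WebAdministration -ErrorAction Stop

    if ($PSCmdlet.ShouldProcess($SiteName, "Bind https://${HostName}:443 and remove port $OldPort")) {
        # SslFlags 1 = SNI, so several sites can share port 443 by host name
        New-WebBinding -Name $SiteName -Protocol https -Port 443 -HostHeader $HostName -SslFlags 1

        # Attach the certificate to the new binding
        $binding = Get-WebBinding -Name $SiteName -Protocol https -Port 443 -HostHeader $HostName
        $binding.AddSslCertificate($CertificateThumbprint, 'My')

        # Remove the default binding on the old port
        Get-WebBinding -Name $SiteName -Port $OldPort | Remove-WebBinding
    }
}
```

Usage, picking the certificate by subject: `$cert = Get-ChildItem Cert:\LocalMachine\My | Where-Object { $_.Subject -like '*systemcenter365.com*' } | Select-Object -First 1`, then `Set-TenantPublicApiBinding -CertificateThumbprint $cert.Thumbprint -WhatIf` to preview, and run again without `-WhatIf` to apply.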

4) Reconfigure the tenant public API with PowerShell

Next, we need to update the Azure Pack configuration using PowerShell (accessing the admin API).

The following cmdlet changes the tenant public API to use port 443 and the host name “api.systemcenter365.com”.

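```powershell
# Point the tenant public API at api.systemcenter365.com on port 443
# (Password=* is a placeholder for your SQL password)
Set-MgmtSvcFqdn -Namespace TenantPublicAPI -FQDN "api.systemcenter365.com" `
    -ConnectionString "Data Source=sqlwap;Initial Catalog=Microsoft.MgmtSvc.Store;User Id=sa;Password=*" `
    -Port 443
```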
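If you would rather not keep the SQL password in a script, the connection string can be built from a credential prompt at run time. This is a sketch: the server name sqlwap and the Microsoft.MgmtSvc.Store database come from the example above, the module name MgmtSvcConfig is an assumption about where `Set-MgmtSvcFqdn` lives on your Azure Pack server, and the function name is hypothetical.

```powershell
function Set-TenantPublicApiFqdn {
    [CmdletBinding()]
    param(
        [string]$Fqdn = 'api.systemcenter365.com',
        [int]$Port = 443,
        # SQL Server instance hosting the Microsoft.MgmtSvc.Store database
        [string]$SqlServer = 'sqlwap',
        [Parameter(Mandatory)]
        [System.Management.Automation.PSCredential]$SqlCredential
    )

    # Assumed module name for the Azure Pack configuration cmdlets
    Import-Module MgmtSvcConfig -ErrorAction Stop

    # Build the connection string at run time instead of hard-coding the password
    $password = $SqlCredential.GetNetworkCredential().Password
    $connectionString = "Data Source=$SqlServer;Initial Catalog=Microsoft.MgmtSvc.Store;" +
        "User Id=$($SqlCredential.UserName);Password=$password"

    Set-MgmtSvcFqdn -Namespace TenantPublicAPI -FQDN $Fqdn -ConnectionString $connectionString -Port $Port
}
```

Usage: `Set-TenantPublicApiFqdn -SqlCredential (Get-Credential sa)`.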

That’s it! You are done and have now made the tenant public API publicly accessible.
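To confirm that the endpoint really is reachable from the outside, you can run a TCP test on port 443 from a machine outside your network. A minimal sketch, assuming Windows 8.1 / Windows Server 2012 R2 or later on the client (where `Test-NetConnection` is available); the function name is hypothetical.

```powershell
function Test-TenantPublicApiEndpoint {
    [CmdletBinding()]
    param(
        [string]$HostName = 'api.systemcenter365.com',
        [int]$Port = 443
    )

    # TCP connection test; warnings are suppressed so the result object is the only output
    $result = Test-NetConnection -ComputerName $HostName -Port $Port -WarningAction SilentlyContinue

    [pscustomobject]@{
        HostName      = $HostName
        Port          = $Port
        RemoteAddress = $result.RemoteAddress
        TcpSucceeded  = $result.TcpTestSucceeded
    }
}
```

Usage: `Test-TenantPublicApiEndpoint`. A successful TCP test only shows that the port is open; the certificate and host name are exercised when you connect with the tenant cmdlets later on.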

Before we proceed, we need to make sure that we have the right tools in place for accessing the API as a tenant.

It might be quite obvious to some, but not to everyone: to be able to manage Azure Pack subscriptions through PowerShell, we basically need the PowerShell module for Microsoft Azure. That is right. The Azure module for PowerShell contains a number of cmdlets that are directly related to Azure Pack.
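Once the module is installed, you can list the Azure Pack cmdlets it contains; they share the WAPack noun prefix. A small sketch, assuming the service management module is installed under the name Azure (as it was for the module versions contemporary with this post); the function name is hypothetical.

```powershell
function Get-WapTenantCmdlet {
    [CmdletBinding()]
    param()

    # The service management module for Microsoft Azure is assumed to be named "Azure"
    if (-not (Get-Module -ListAvailable -Name Azure)) {
        throw 'The Azure PowerShell module is not installed.'
    }

    Import-Module Azure

    # All Azure Pack cmdlets use a WAPack* noun, e.g. Get-WAPackSubscription
    Get-Command -Module Azure -Noun 'WAPack*' |
        Sort-Object Noun, Verb |
        Select-Object -ExpandProperty Name
}
```

Usage: `Get-WapTenantCmdlet`, then `Get-Help <cmdlet name> -Full` for any of the listed cmdlets.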

You can read more about the Azure module and download it by following this link: http://azure.microsoft.com/en-us/documentation/articles/install-configure-powershell/

Or simply search for it in Web Platform Installer, if you have it installed on your machine.

Deploying a virtual machine through the Tenant Public API

Again, if you are familiar with Microsoft Azure and the PowerShell module, you have probably used the “publishsettings” file a couple of times.

Normally, when logging on to Azure or Azure Pack, you browse to the portal, get redirected to an authentication site (which can also be ADFS if you are not using the default authentication site in Azure Pack), and are then sent back to the portal, which in our case is cloud.systemcenter365.com.

The same process takes place when you access the “publishsettings”. Typing https://cloud.systemcenter365.com/publishsettings in Internet Explorer will first require you to log on, and then give you access to your publish settings. This downloads a file that contains your secure credentials and additional information about your subscription for use in your WAP environment.

Once it is downloaded, we can open the file to explore its content and verify the changes we made when making the tenant public API publicly accessible at the beginning of this blog post.

[Picture: API content of the publish settings file]
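Instead of reading the XML by eye, you can pull the endpoint out of the file with PowerShell. This sketch assumes the file follows the same layout as the Microsoft Azure publish settings format, with a PublishData/PublishProfile/Subscription element carrying Name, Id and ServiceManagementUrl attributes; check that against your own file. The function name is hypothetical.

```powershell
function Get-PublishSettingsEndpoint {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string]$Path
    )

    # Load the publish settings file as an XML document
    [xml]$doc = Get-Content -Path $Path -Raw

    # Assumed layout: one Subscription element per subscription under PublishProfile
    foreach ($sub in $doc.SelectNodes('/PublishData/PublishProfile/Subscription')) {
        [pscustomobject]@{
            Name                 = $sub.GetAttribute('Name')
            Id                   = $sub.GetAttribute('Id')
            ServiceManagementUrl = $sub.GetAttribute('ServiceManagementUrl')
        }
    }
}
```

Usage: `Get-PublishSettingsEndpoint -Path 'C:\MVP.Publishsettings'`. If the reconfiguration worked, the ServiceManagementUrl should reference api.systemcenter365.com on port 443 rather than port 30006.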

Next, we will head over to PowerShell to start exploring the capabilities.

1) Import the publish settings file using PowerShell

```powershell
Import-WAPackPublishSettingsFile "C:\MVP.Publishsettings"
```

Make sure the path fits your environment and points to the file you have downloaded.
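Because the file contains your credentials, it can be worth wrapping the import so that it fails early on a wrong path and optionally deletes the file once it has been imported. A sketch only: the function and its `-RemoveAfterImport` switch are hypothetical, and the module name Azure is assumed as above.

```powershell
function Import-TenantPublishSettings {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string]$Path,
        # Delete the file after a successful import, since it holds tenant credentials
        [switch]$RemoveAfterImport
    )

    # Fail early with a clear message if the path is wrong
    if (-not (Test-Path -Path $Path -PathType Leaf)) {
        throw "Publish settings file not found: $Path"
    }

    Import-Module Azure -ErrorAction Stop

    # Import the tenant credentials and subscription details into the local profile
    Import-WAPackPublishSettingsFile $Path -ErrorAction Stop

    if ($RemoveAfterImport) {
        Remove-Item -Path $Path
    }
}
```

Usage: `Import-TenantPublishSettings -Path 'C:\MVP.Publishsettings' -RemoveAfterImport`.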

2) Check the active subscriptions for the tenant

```powershell
Get-WAPackSubscription | Select-Object SubscriptionName, ServiceEndpoint
```
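If the tenant has several subscriptions, you can filter on the service endpoint to confirm that they point at the new public API host name. A small sketch; the function name is hypothetical, and the endpoint is converted to a string before matching so it works whether `ServiceEndpoint` is a string or a URI object.

```powershell
function Get-WapSubscriptionByEndpoint {
    [CmdletBinding()]
    param(
        [string]$HostName = 'api.systemcenter365.com'
    )

    # Compare the endpoint as a string so the wildcard match works for string or Uri values
    Get-WAPackSubscription |
        Where-Object { "$($_.ServiceEndpoint)" -like "*$HostName*" } |
        Select-Object SubscriptionName, ServiceEndpoint
}
```

Usage: `Get-WapSubscriptionByEndpoint`. An empty result means none of the imported subscriptions use that host name.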

3) Deploy a new virtual machine

To create a new virtual machine, we first need some variables that store information about the template we will use and the virtual network we will connect to, and then we can proceed to create the virtual machine.
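A sketch of those steps with the Azure Pack cmdlets from the Azure module. The template name and network name are hypothetical defaults; use names that your plan actually offers (`Get-WAPackVMTemplate` and `Get-WAPackVNet` without parameters list what is available). The function name is also hypothetical, and it is worth checking `Get-Help New-WAPackVM -Full` for the parameters in your module version.

```powershell
function New-TenantVM {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string]$Name,
        # Hypothetical names: replace with a template and network from your plan
        [string]$TemplateName = 'Windows Server 2012 R2',
        [string]$VNetName = 'Tenant Network',
        # Local administrator credentials for the new VM
        [Parameter(Mandatory)]
        [System.Management.Automation.PSCredential]$VMCredential
    )

    Import-Module Azure -ErrorAction Stop

    # Variables holding the template and the virtual network to connect to
    $template = Get-WAPackVMTemplate -Name $TemplateName
    $vnet = Get-WAPackVNet -Name $VNetName

    if (-not $template) { throw "Template '$TemplateName' not found." }
    if (-not $vnet) { throw "Virtual network '$VNetName' not found." }

    # Create a Windows VM from the template and connect it to the network
    New-WAPackVM -Name $Name -Template $template -VNet $vnet -VMCredential $VMCredential -Windows
}
```

Usage: `New-TenantVM -Name 'MVPVM' -VMCredential (Get-Credential)`.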

4) Going

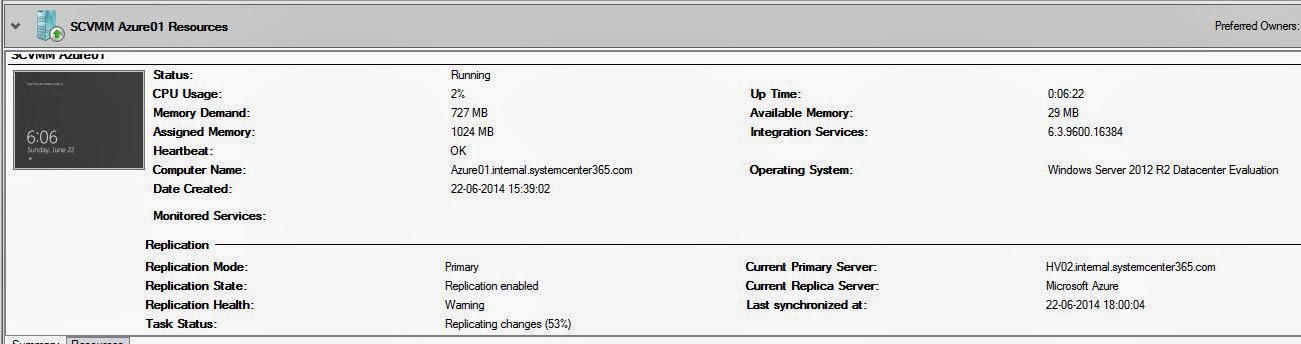

back to the tenant portal, we can see that we are currently provisioning a new

virtual machine that we initiated through the tenant public API
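You can follow the same provisioning from PowerShell instead of the portal. A minimal sketch; the function name is hypothetical, and the VM name you pass is whatever you used when creating the machine. It prints every property because the exact status fields depend on the module version.

```powershell
function Get-TenantVMDetail {
    [CmdletBinding()]
    param(
        # The name you gave the VM when creating it
        [Parameter(Mandatory)]
        [string]$Name
    )

    Import-Module Azure -ErrorAction Stop

    # Show all properties; status field names vary between module versions
    Get-WAPackVM -Name $Name | Format-List *
}
```

Usage: `Get-TenantVMDetail -Name 'MVPVM'`.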