When your tenants are using VLANs instead of SDN

Ever since the release of Windows Azure Pack, I’ve been a strong believer in software-defined datacenters powered by Microsoft technologies. The story around NVGRE has been especially interesting, and it is something that Windows Server, System Center and Azure Pack are really embracing.

If you want to learn and read more about NVGRE in this context, I recommend having a look at our whitepaper: https://gallery.technet.microsoft.com/Hybrid-Cloud-with-NVGRE-aa6e1e9a

Also, if you want to learn how to design a scalable management stamp and turn SCVMM into a fabric controller for your multi-tenant cloud, where NVGRE is essential, have a look at this session: http://channel9.msdn.com/Events/TechEd/Europe/2014/CDP-B327

The objective of this blog post is to:

· Show how you should design VMM to deliver and use dedicated VLANs for your tenants
· Show how to structure and design your hosting plans in Azure Pack
· Customize the plan settings to avoid confusion

How to design VMM to deliver and use dedicated VLANs for your tenants

Designing and implementing a solid networking structure in VMM can be quite a challenging task.

We normally see that during setup and installation of VMM, people don’t have all the information they need. As a result, they have already deployed a couple of hosts before they start paying attention to:

1) Host groups
2) Logical networks
3) Storage classifications

Needless to say, it is very difficult to change any of this afterwards, once you have several objects in VMM with dependencies and deep relationships.

So let us just assume that we are able to follow the guidelines and pattern I’ve been using in this script:

The fabric controller script will create host groups based on physical locations, with child host groups that contain different functions.

For all the logical networks in that script, I am using “one connected network” as the network type.

This creates a 1:many mapping from each logical network to its VM networks, which simplifies scalability and management.

For the VLAN networks, though, I will not use the “one connected network” type, but rather “VLAN-based independent networks”.

This will effectively let me create a 1:1 mapping of a VM network to a specific VLAN/subnet within this logical network.

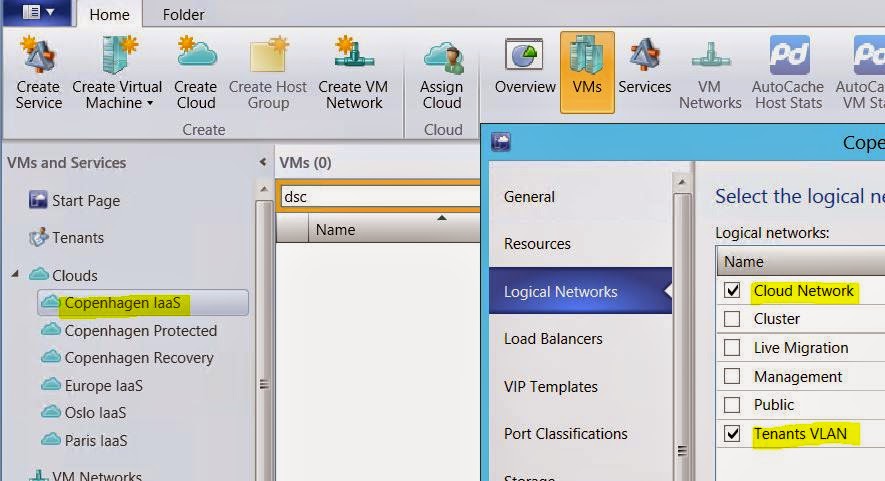
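To make the difference between the two logical network types concrete, here is a minimal toy model in Python. It is only a sketch of the mapping rules described above, not the VMM API: the class, the `kind` strings, the VLAN IDs and the subnets are all made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LogicalNetwork:
    """Toy model of a logical network (hypothetical, not a VMM object)."""
    name: str
    # "connected" = one connected network,
    # "vlan_independent" = VLAN-based independent networks
    kind: str
    sites: dict = field(default_factory=dict)        # VLAN ID -> subnet
    vm_networks: dict = field(default_factory=dict)  # VM network name -> VLAN ID

    def add_vm_network(self, name, vlan_id=None):
        if self.kind == "vlan_independent":
            # 1:1 rule: the VLAN must exist and must not be taken already
            if vlan_id not in self.sites:
                raise ValueError(f"VLAN {vlan_id} is not defined in {self.name}")
            if vlan_id in self.vm_networks.values():
                raise ValueError(f"VLAN {vlan_id} already has a VM network")
        # For "connected", any number of VM networks may share the logical network
        self.vm_networks[name] = vlan_id


# Example values are assumptions, chosen only for illustration
tenants = LogicalNetwork(
    "Tenants VLAN",
    "vlan_independent",
    sites={100: "10.0.100.0/24", 101: "10.0.101.0/24"},
)
tenants.add_vm_network("Tenant A", 100)
tenants.add_vm_network("Tenant B", 101)
```

In this model, trying to add a second VM network on VLAN 100 raises an error, which mirrors the 1:1 relationship between a VM network and a VLAN/subnet.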

The following screenshot shows the mapping and the design in our fabric.

Now the big question: why a VLAN-based independent network with a 1:1 mapping of VM network to VLAN?

As I will show you shortly, this type of logical network gives us more flexibility for our tenant VLANs, because each VLAN is isolated in its own VM network.

When we are adding the newly created logical network to a VMM Cloud, we simply have to select the entire logical network.

But when we are creating Hosting Plans in the Azure Pack admin portal/API, we can now select the single, preferred VM Network (based on VLAN) for each tenant.

The following screenshot from VMM shows our Cloud that is using both the Cloud Network (PA network space for NVGRE) and Tenants VLAN.

So once we have the logical network enabled at the cloud level in VMM, we can move into the Azure Pack section of this blog post.

Azure Pack is multi-tenant by definition and, together with VMM and the VM Cloud resource provider, lets you scale and modify the environment to fit your needs.

When using NVGRE as the foundation for our tenants, we are able to use Azure Pack “out of the box” with a single hosting plan, based on the VMM Cloud where we added our logical network for NVGRE, and tenants can create and manage their own software-defined networks. A single hosting plan is enough here, because every tenant is isolated on its own virtualized network.

Of course, there might be other valid reasons to have different hosting plans, such as SLAs, VM Roles and other service offerings. But for NVGRE, everyone can live in the same plan.

This changes once you are using VLANs. If you have a dedicated VLAN per customer, you must add the dedicated VLAN to the hosting plan in Azure Pack. This effectively forces you to create a hosting plan per tenant, so that tenants are not able to see or share each other’s VLAN configuration.
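The plan-per-tenant rule can be sketched in a few lines of Python. This is only an illustration of the design constraint, not the Azure Pack admin API: the function, the plan naming scheme, the cloud name and the tenant names are all hypothetical.

```python
def build_plans(tenant_vm_networks, cloud="Tenant Cloud"):
    """Return one hosting plan per tenant, each exposing only that tenant's VM network.

    tenant_vm_networks maps a tenant name to its dedicated (VLAN-based) VM network.
    All names here are illustrative assumptions.
    """
    plans = {}
    used = set()
    for tenant, vm_network in tenant_vm_networks.items():
        # A dedicated VLAN must never show up in more than one plan
        if vm_network in used:
            raise ValueError(f"{vm_network} is already assigned to another plan")
        used.add(vm_network)
        plans[f"Plan - {tenant}"] = {"cloud": cloud, "vm_networks": [vm_network]}
    return plans


plans = build_plans({"Contoso": "VLAN 100", "Fabrikam": "VLAN 101"})
for name, plan in plans.items():
    print(name, plan["vm_networks"])
```

The number of plans grows linearly with the number of VLAN tenants, which is the trade-off compared to the single shared plan that works for NVGRE.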

The following architecture shows how this scales.

In the hosting plan in Azure Pack, you simply add the dedicated VLAN to the plan, and it becomes available once the tenant subscribes to the plan.

Bonus info:

With Update Rollup 5 of Azure Pack, we now have a new setting that simplifies life for all the VLAN tenants out there!

I’ve always said that “if you give people too much information, they’ll ask too many questions”.

It seems the Azure Pack product group agrees, and we now have a new setting at the plan level in WAP called “disable built-in network extension for tenants”.

So let us see what this looks like in the tenant portal when we are accessing a hosting plan that:

a) Provides VM Clouds
b) Has the option “disable built-in network extension for tenants” enabled

This reduces confusion for these tenants, since they were never able to manage any network artefacts in Azure Pack when VLANs were used. They can, of course, still deploy virtual machines and roles into the VLAN(s) that are available in their hosting plan.