- This is fantastic, but will this replace the need for a backup of our VMs?

No, absolutely not. And I’ll stress in this post why you should not use snapshots in production, and what the consequences will be.

Instead of just pointing at the reasons why we should not use snapshots, I will also try to explain how they work, when you should consider a snapshot, and some common pitfalls.

Virtual Machine Snapshots – How it works

As stated earlier in this post, you can capture state and configuration of a VM at any point in time.

You can also create multiple snapshots, delete snapshots, and apply snapshots.

In Hyper-V Manager, you can right-click a VM and take a snapshot when the current state of the VM is:

· On

· Off

· Saved

Let’s take a look at what happens when you initiate a snapshot of a running VM:

1. The VM pauses

2. An AVHD is created for the snapshot

3. The VM is configured

4. The VM is pointed to the newly created AVHD

5. The VM resumes (the pause in step 1 and the resume here should not be noticeable to the end user)

6. While the VM is running, the contents of the VM memory are saved to disk. (If the guest OS attempts to modify memory that has not yet been copied, the write attempt is intercepted and the original memory contents are copied first)

7. Once the snapshot is completed, the VM configuration, saved state files, and AVHD are stored in a folder under the VM snapshots directory.
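To make step 6 a bit more concrete, here is a small Python sketch of the idea of saving memory while the guest keeps running. It is a toy model only: the class `LiveMemorySaver` and its methods are names I made up for illustration, and real Hyper-V works on memory pages inside the hypervisor, not on Python lists.

```python
# Toy model of step 6: saving VM memory while the guest keeps running.
# All names are hypothetical; this is not Hyper-V code.

class LiveMemorySaver:
    def __init__(self, memory):
        self.memory = memory   # live guest memory, one value per page
        self.saved = {}        # page index -> contents at snapshot time

    def copy_next_page(self):
        """Background copy: save one page that has not been saved yet."""
        for page in range(len(self.memory)):
            if page not in self.saved:
                self.saved[page] = self.memory[page]
                return True
        return False           # nothing left to copy

    def guest_write(self, page, value):
        """Intercept a guest write: preserve the original page first."""
        if page not in self.saved:
            self.saved[page] = self.memory[page]
        self.memory[page] = value

    def saved_state(self):
        return [self.saved[p] for p in range(len(self.memory))]


memory = ["a", "b", "c", "d"]
saver = LiveMemorySaver(memory)
saver.copy_next_page()         # page 0 is saved in the background
saver.guest_write(2, "C2")     # guest modifies a page that is not saved yet
saver.guest_write(0, "A2")     # guest modifies a page that is already saved
while saver.copy_next_page():  # finish the background copy
    pass

print(saver.saved_state())     # ['a', 'b', 'c', 'd']
print(memory)                  # ['A2', 'b', 'C2', 'd']
```

The saved state reflects the memory as it was when the snapshot started, even though the guest kept writing in the meantime.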

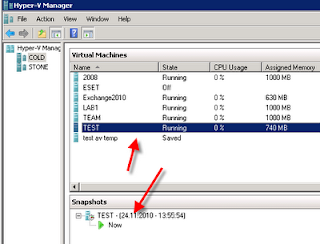

1. You initiate a snapshot in Hyper-V Manager: right-click a VM and select ‘Snapshot’

2. The process starts

3. The snapshot is now completed

4. If we take a look at the settings of the VM’s virtual disk, we see that the newly created AVHD is the current disk for this VM. Also note the type “Differencing virtual hard disk”.

If we take another snapshot now, we will have another AVHD file that contains the changes of the VM since the last snapshot (AVHD).
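A differencing disk only stores what has changed relative to its parent, so a chain of AVHDs can be pictured as layers. The following Python sketch is my own simplified model (the `Disk` class and the file names are hypothetical, and real AVHD files track blocks in a binary format): writes land in the newest layer, and reads fall through to the parent when the layer has no data for that block.

```python
# Simplified model of a VHD with a chain of differencing disks (AVHDs).
# Class and file names are made up for illustration.

class Disk:
    """A disk layer: a base VHD (parent=None) or a differencing AVHD."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.blocks = {}  # block number -> data written to this layer

    def write(self, block, data):
        self.blocks[block] = data

    def read(self, block):
        # Walk from the newest layer towards the base VHD.
        layer = self
        while layer is not None:
            if block in layer.blocks:
                return layer.blocks[block]
            layer = layer.parent
        return None  # block was never written


base = Disk("vm.vhd")
base.write(0, "os")
base.write(1, "data-v1")

snap1 = Disk("snap1.avhd", parent=base)   # created by the first snapshot
snap1.write(1, "data-v2")

snap2 = Disk("snap2.avhd", parent=snap1)  # created by the second snapshot
snap2.write(2, "logs")

print(snap2.read(0))  # os       (falls through to the base VHD)
print(snap2.read(1))  # data-v2  (found in the first AVHD)
print(snap2.read(2))  # logs     (found in the newest AVHD)
print(base.read(1))   # data-v1  (the base VHD itself is unchanged)
```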

If we browse in Explorer to the Virtual Hard Disks folder for this VM, we see the following:

What happens when you apply a Virtual Machine Snapshot?

When you apply a snapshot, you literally copy the entire VM state from the selected snapshot to the active VM. This will return your current working state to the previous snapshot state. In other words, you will lose all changes, configuration, data, and so on made since then, unless you take a new snapshot of the current state before applying the selected snapshot.

The process:

1. The VM saved state files (.bin, .vsv) are copied

2. A new AVHD is created and then linked to the parent AVHD

Applying a previous snapshot adds another branch to your snapshot hierarchy, starting at the applied snapshot.
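Here is a rough Python sketch of why applying a snapshot throws away the current state unless you snapshot it first. It is a model under my own assumptions, not Hyper-V’s implementation: the `Layer` and `VM` classes, the `diffN.avhd` names, and the snapshot names are all hypothetical.

```python
# Toy model: applying a snapshot starts a new AVHD on top of the
# snapshot's frozen disk. All names are hypothetical.

class Layer:
    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.blocks = {}


class VM:
    def __init__(self):
        self.active = Layer("vm.vhd")  # layer that receives writes
        self.snapshots = {}            # snapshot name -> frozen layer
        self._counter = 0

    def _new_layer(self, parent):
        self._counter += 1
        return Layer(f"diff{self._counter}.avhd", parent)

    def write(self, block, data):
        self.active.blocks[block] = data

    def read(self, block):
        layer = self.active
        while layer is not None:
            if block in layer.blocks:
                return layer.blocks[block]
            layer = layer.parent
        return None

    def take_snapshot(self, name):
        self.snapshots[name] = self.active           # freeze current layer
        self.active = self._new_layer(self.active)   # new AVHD on top

    def apply_snapshot(self, name):
        # The previous active layer is abandoned here. Anything written
        # to it is lost unless a snapshot was taken first.
        self.active = self._new_layer(self.snapshots[name])


vm = VM()
vm.write(0, "clean install")
vm.take_snapshot("before-patch")
vm.write(0, "patched")
vm.take_snapshot("after-patch")    # protect the current state first

vm.apply_snapshot("before-patch")
print(vm.read(0))                  # clean install
print(vm.active.parent.name)       # vm.vhd (new branch from the snapshot)

vm.apply_snapshot("after-patch")
print(vm.read(0))                  # patched (still reachable)
```

Without the `after-patch` snapshot, the "patched" data would only have existed in the abandoned layer.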

And last, what happens when you delete a Virtual Machine Snapshot?

When you delete a snapshot, you also delete all the saved state files (.bin and .vsv).

The process:

1. The copy of the VM configuration taken during the snapshot process is removed

2. The copy of the VM memory taken during the snapshot process is removed

3. When the VM is powered off, the contents of any deleted AVHD are merged with its parent (VHD)
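The merge in step 3 can be pictured as writing the changed blocks of the AVHD back into its parent. The Python sketch below is an assumption-laden simplification: the function `merge_into_parent` is hypothetical, disks are plain dictionaries, and I merge the oldest AVHD first so that newer data wins.

```python
# Toy model of merging differencing disks back into the parent VHD.
# Disks are dictionaries of block number -> data; names are made up.

def merge_into_parent(parent_blocks, child_blocks):
    """Return the parent's contents after the child's changes are written back."""
    merged = dict(parent_blocks)
    merged.update(child_blocks)  # the child holds the newer data, so it wins
    return merged


vhd = {0: "os", 1: "data-v1"}
avhd1 = {1: "data-v2"}             # changes after the first snapshot
avhd2 = {1: "data-v3", 2: "logs"}  # changes after the second snapshot

# Merge the oldest AVHD first, then the newer one on top of the result.
result = merge_into_parent(merge_into_parent(vhd, avhd1), avhd2)
print(result)  # {0: 'os', 1: 'data-v3', 2: 'logs'}
```

Every changed block has to be written again, which is why a large AVHD means a long merge.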

OK, so now we know some basic facts about the snapshot process.

What is best practice, and what are my personal recommendations?

Don’t use snapshots in production.

The root reason for this is the downtime required for merging the snapshots.

If you create a snapshot of a VM with the default IDE disk size of 127GB, you can consume an additional 127GB of your storage. And this continues for the next snapshot, and so on. Snapshots require storage, and if you don’t have the required free storage available when merging your snapshots, the merge process will fail. (In Hyper-V 2008 R2, you can take a new snapshot, export that snapshot, and import it as a VM. That will in some situations save you, or at least shorten the merge process)

So the keywords here are: storage and downtime.
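To put numbers on this, here is a small Python sketch of the worst case, assuming every AVHD eventually grows to the full size of the virtual disk. The function name is my own, and the figure is an upper bound that ignores the saved state files.

```python
# Worst-case disk usage: the base VHD plus one fully grown AVHD per snapshot.
# This is an upper bound under the assumption stated above, not a prediction.

def worst_case_storage_gb(disk_size_gb, snapshot_count):
    return disk_size_gb * (1 + snapshot_count)


for count in range(4):
    print(count, "snapshots:", worst_case_storage_gb(127, count), "GB")
# 0 snapshots: 127 GB
# 1 snapshots: 254 GB
# 2 snapshots: 381 GB
# 3 snapshots: 508 GB
```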

The merge process is writing the VM’s history back to the VHD file, and that can take hours. You don’t want to find yourself in that situation (believe me :) ).

Snapshots are great for testing, and give you the ability to test and devastate your VMs.

But even for testing, I would not recommend using snapshots on a DC, even if it’s in a test environment.

That may lead to inconsistency in the Active Directory database, and as you know, every part of an Active Directory domain would be affected.

Another tip is to give your snapshots useful names, so that when you have a snapshot hierarchy you are able to sort them and find out what is what.

And last, let Hyper-V Manager take care of your snapshots. Don’t rename, edit, move, replace, or delete the associated snapshot files.

Drive carefully when considering snapshots, and have fun.